

File: ReferenceManual.html

HTMLtools is a Java program to automate the batch conversion of tab-delimited spreadsheet-type text files to HTML Web-page files. A variety of flexible options make the Web page presentations more useful. It can also be used for editing large tables. These features are described in more detail throughout this reference manual.

Additional command subsets were developed for specialized conversions (JTVconvert, GenBatchScripts, and TestsIntersections) and may be ignored for routine Web page generation in other domains where they don't apply.

The JTVconvert commands can re-map data array names in Java TreeView mAdb data set files to more user-friendly experiment names, as well as generate HTML Web pages to launch these converted JTV files for each JTV data set.

The GenBatchScripts commands may be used to generate HTMLtools batch scripts for subsequent processing given a list of data test-results files to convert and a tab-delimited tests descriptions file. It is able to use a table (prepared with Excel or some other source) that describes this data and can then use that for extracting and inserting information from various mapping tables into the generated Web pages.

The TestsIntersections commands will synthesize a tests intersection summary table and Web page as well as generate some summary statistics. They use the same data used in the GenBatchScripts commands.

The Converter GUI mode starts a graphical user interface (GUI) where the user can specify either an individual parameter script or a batch file list of scripts to be executed in the background. The results are shown in the user interface, which can also display the generated HTML files through a pop-up Web browser.

The Search GUI mode starts a database search graphical user interface (GUI) to generate a "flipped table" (see the examples in Figure S.14 and Figure S.16) on a subset of the data from a pre-computed edited table database. The user can specify filters on the rows and columns (data and sample subsets) and presentation options, and then view the generated HTML file through a pop-up Web browser.


Note: this software is released as an OPEN SOURCE project HTMLtools on http://HTMLtools.SourceForge.net/ with a small non-proprietary sample data set that is bundled along with the program. This data set has already been published on the public access NLM/NCBI GEO Web site. The original data set was proprietary; it was created for the Group STAT Project (GSP), along with the original conversion program, CvtTabDelim2HTML, to support the NIH Jak-Stat Prospector Web site, which is part of the Trans-NIH Jak-Stat Initiative (http://jak-stat.nih.gov/) and will be accessible when it is opened to the public in the future. Note: the initial release only includes GSP data that has been released to the public in NCBI's GEO database (currently one data set). As more GSP GEO data is released to the public, we will include some of it in the demo database to illustrate more of the features of HTMLtools.

1. Gather sets of laboratory experiments in multiple laboratories

relating to the Jak-Stat gene pathway

|

v

2. Create Affymetrix microarray data (resulting in .CEL data files)

|

v

3. Create Inventory of relevant data and annotation of the data

in the GSP-Inventory.xls spreadsheets consisting of:

1) group arrays by experiment EG001, EG002,...EG00n;

2) a top level spreadsheet ExperimentGroups describing

all EG experiments.

|

v

4. Consolidate data in mAdb (Microarray DataBase system

mAdb.nci.nih.gov). Data is uploaded to each EGxxx subproject,

and normalized by pooled RMA or MAS5.

|

v

4.1 Perform t-test or fold-change tests on subsets of the data

that make sense to compare, saving results (+ and - changes

separately) as gene subsets.

|

v

4.2 Export tab-delimited (Excel) mAdb Retrieval Reports (MRR)

for each gene set for 1) just the arrays used in the test;

2) all samples in the database.

|

v

4.3 Compute and export the hierarchical clustered heat maps as

Java Tree View (JTV) .zip tab-delimited data sets for external

viewing for 1) just the arrays used in the test; 2) all samples

in the database.

|

v

5. Convert the MRR and JTV tab-delimited data to HTML Web pages

using the HTMLtools converter

|

v

6. Merge links to this generated data with Web pages in

the Jak-Stat Prospector Web server (and upload to the server).

Figure 1. shows an example of a data analysis processing pipeline to convert laboratory microarray data to Web pages that can be used in the Jak-Stat Prospector Web site. Steps 4.1 and 4.2 could be run for a set of experiments as a batch job. Similarly, the set of files exported from mAdb could be batch processed with HTMLtools. Note that although the HTMLtools converter was developed for this project, the command structure is flexible enough that it could easily be used with other types of data.

Section 7 describes creating and running the scripts for the batchScripts/ directory for creating Web pages.

1. Read the mAdb-TestsToDo.txt table that specifies all of the tests to be performed on subsets of the mAdb GSP database. For each test, these include: test name, samples being compared, test thresholds, test name and related annotation, tissue name, and the relative directory for the data (used for both the input InputTree/ and the output Analyses/ generated directory trees).

|

v

2. Create lists of related tests by grouping tests with the same tissue name.

|

v

3. Read additional mapping table files (ExperimentGroups.map, EGMAP.map, CellTypeTissue.map) to use in generating the summary Web pages.

|

v

4. Create summary Web pages for each tissue type with links to Web pages for the analyses we will generate, and save them in the Summary/ directory.

|

v

5. Generate all of the 'params .map' batch scripts, several for each test, and save them in the ParamScripts/ directory (see Figure 3 for details). It then copies all support files (the above mapping tables), JTVjars/, data.Table/ and other files required when running the converter to generate Web pages.

|

v

6. Generate a buildWebPages.doit file listing the params .map files to be processed in a subsequent batch run using the HTMLtools converter, and a Windows .BAT file, buildWebPages.bat.

|

v

7. Start the buildWebPages.bat batch job, which generates the Web pages in the Summary/, Analyses/ and JTV/ directory trees.

|

v

8. Copy the generated Web pages to the Web server.

Figure 2. shows an example of the batch script generation pipeline from a table describing a list of tests that were run as a batch job on another analysis system. In this case, the analysis system is mAdb, and it uses the same test "todo" file, data.Table/mAdb-TestsToDo.txt, to specify the tests as is used here with the GenBatchScripts processing. The mAdb data analysis and the tab-delimited Excel data it generates are shown in steps 4.1 and 4.2 (see Figure 1).

In the GenBatchScripts processing, we first create a batchScripts/ directory and then fill it with the various types of data described in this figure.

| Tests (MRR & JTV) input | Converter output |
|---|---|
| Tests for samples: | |
| {testName}+FC.txt | {testName}+FC.html, {testName}+FC-keep.html |
| {testName}-FC.txt | {testName}-FC.html, {testName}-FC-keep.html |
| AND of above tests for ALL samples: | |
| {testName}+FC-ALL.txt | {testName}+FC-ALL.html |
| {testName}-FC-ALL.txt | {testName}-FC-ALL.html |
| JTV for test samples: | |
| {testName}+FC-JTV.zip | {testName}+FC-JTV/, {testName}+FC-JTV.zip, {testName}+FC-JTV.html |
| {testName}-FC-JTV.zip | {testName}-FC-JTV/, {testName}-FC-JTV.zip, {testName}-FC-JTV.html |
| JTV for AND of above tests for ALL samples: | |
| {testName}+FC-ALL-JTV.zip | {testName}+FC-ALL-JTV/, {testName}+FC-ALL-JTV.zip, {testName}+FC-ALL-JTV.html |
| {testName}-FC-ALL-JTV.zip | {testName}-FC-ALL-JTV/, {testName}-FC-ALL-JTV.zip, {testName}-FC-ALL-JTV.html |

Figure 3. shows the set of 8 mAdb results files and the 18 HTML and JTV files generated by the converter for each test testName in the mAdb-TestsToDo list. For example, if the test is "EG3.1-test-2", then in the above figure, replace {testName} with EG3.1-test-2, etc. The "+FC" indicates a positive fold-change, and the "-FC" a negative fold-change. The files with "-keep" are gene lists with no expression data. The GenBatchScripts option for the converter generates parameters .map batch scripts for each of these converted files.
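To make the Figure 3 naming pattern concrete, the following sketch expands the 8 input names and 18 output names for one test name. The class Figure3Names and its methods are hypothetical helpers written for this manual, not part of the HTMLtools source; they only encode the naming rules listed in the Figure 3 table.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper (not part of HTMLtools) encoding the Figure 3 naming rules. */
final class Figure3Names {

    private static final String[] SIGNS = {"+", "-"}; // positive and negative fold-change

    /** The 8 mAdb results files expected for one test. */
    static List<String> inputs(String testName) {
        List<String> in = new ArrayList<>();
        for (String s : SIGNS) {
            in.add(testName + s + "FC.txt");
            in.add(testName + s + "FC-ALL.txt");
            in.add(testName + s + "FC-JTV.zip");
            in.add(testName + s + "FC-ALL-JTV.zip");
        }
        return in;
    }

    /** The 18 converter outputs generated for one test. */
    static List<String> outputs(String testName) {
        List<String> out = new ArrayList<>();
        for (String s : SIGNS) {
            String base = testName + s + "FC";
            out.add(base + ".html");
            out.add(base + "-keep.html");   // gene list without expression data
            out.add(base + "-ALL.html");
            for (String jtv : new String[] {base + "-JTV", base + "-ALL-JTV"}) {
                out.add(jtv + "/");         // unpacked JTV data set directory
                out.add(jtv + ".zip");      // copy of the exported .zip
                out.add(jtv + ".html");     // page that launches Java TreeView
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String test = "EG3.1-test-2";
        System.out.println(inputs(test).size() + " inputs, " + outputs(test).size() + " outputs");
        outputs(test).forEach(System.out::println);
    }
}
```

Building the names in a fixed order makes it easy to compare them against a directory listing when checking that a batch run produced every expected file.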

1. Edit the GSP-Inventory Excel workbook to annotate the set of Affymetrix .CEL files, where we assign the next free Experiment Group EGnnn, simple GSP ID, GSP ID, etc.

|

v

2. Upload the Affymetrix .CEL file data to the GSP mAdb database and normalize the new samples using the pooled RMA data for the base GSP database.

|

v

3. Add the new tests to the to-do list in the mAdb-TestsToDo.xls Excel workbook and upload the new test list to mAdb.

|

v

4. Run the batch tests in mAdb, resulting in Excel and JTV data sets that are exported for conversion to Web pages.

|

v

5. Process these data using the HTMLtools converter into HTML pages and converted data for the Web server.

|

v

6. Upload these Web pages and data to the NIDDK Jak-Stat Prospector staging area for the jak-stat.nih.gov server.

Figure 4. shows the top-level procedure used for adding new Affymetrix .CEL file data sets to the GSP database and the Jak-Stat Prospector Web server. In addition, if new gene identifications are made for some of the Affymetrix probes (Feature IDs), running steps 4) through 6) can update these identifiers.

Java TreeView (JTV) Documentation

Java TreeView is an open-source (jTreeView.sourceforge.net) Java applet that mAdb uses to view heatmaps of gene sets. We also use Java TreeView for looking at data snapshots we have taken of the mAdb data. Java TreeView may be downloaded to run as either a standalone application or a Java applet from http://jTreeView.sourceforge.net/. The 2004 journal paper by Alok J. Saldanha gives an overview of Java TreeView: "Java Treeview - extensible visualization of microarray data", Bioinformatics 2004 20(17):3246-3248. An additional Java TreeView documentation Web page includes links to examples, an FAQ, a user guide, and Alok J. Saldanha's dissertation describing additional aspects of Java TreeView. NOTE: The Java TreeView applet has been shown to work on Mac OS X, Windows XP and Win2K.


java -Xmx256M -classpath .;.\HTMLtools.jar \

HTMLtools -inputDir:dataXXX -outputDir:html (etc.)

java -Xmx256M -classpath .;.\HTMLtools.jar \

HTMLtools dataXXX/paramsXXX.map

or (in Unix, MacOS-X, or Cygwin):

java -Xmx256M -classpath .:./HTMLtools.jar \

HTMLtools dataXXX/paramsXXX.map

or

java -Xmx256M -classpath . -jar HTMLtools.jar \

dataXXX/paramsXXX.map

where the dataXXX/ directory and the paramsXXX.map file are replaced by your data directory and params map file. The generated HTML files will then be in the html/ directory, or whatever output directory is specified by the -outputDirectory switch in the paramsXXX.map file. This command line tells Java to run the program with 256 Mbytes of memory. For very large files, you may need to increase this memory size. For very large data sets, even that may not be enough, and you may not be able to convert them, since in the default mode the Table is loaded into memory before being edited. Some commands, such as -fastEditFile, are designed to work with very large files: they process them as a buffered I/O pipeline and so don't load the Table into memory.
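The difference between the default in-memory mode and a buffered pipeline such as -fastEditFile can be sketched as follows. This is a minimal sketch, assuming a tab-delimited file whose first line is the header; the class name StreamingColumnDrop is hypothetical and this is not the HTMLtools implementation. Only the current line is held in memory, so memory use depends on the longest line rather than on the size of the whole file.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Iterator;
import java.util.Set;
import java.util.StringJoiner;
import java.util.stream.IntStream;

/** Hypothetical sketch: drop named columns from a tab-delimited stream one line at a time. */
final class StreamingColumnDrop {

    static void dropColumns(BufferedReader in, PrintWriter out, Set<String> dropNames) {
        Iterator<String> lines = in.lines().iterator();
        if (!lines.hasNext()) {
            return; // empty input: nothing to write
        }
        // The header decides which column positions survive; limit -1 keeps trailing empty fields.
        String[] header = lines.next().split("\t", -1);
        int[] keep = IntStream.range(0, header.length)
                .filter(i -> !dropNames.contains(header[i]))
                .toArray();
        out.print(project(header, keep) + "\n");
        while (lines.hasNext()) {
            // Each data line is edited and written immediately; no Table is built.
            out.print(project(lines.next().split("\t", -1), keep) + "\n");
        }
        out.flush();
    }

    /** Joins the kept fields with tabs; rows that are too short get empty cells. */
    private static String project(String[] fields, int[] keep) {
        StringJoiner row = new StringJoiner("\t");
        for (int i : keep) {
            row.add(i < fields.length ? fields[i] : "");
        }
        return row.toString();
    }

    // Usage (hypothetical column names): java StreamingColumnDrop Notes Comments < big.txt > edited.txt
    public static void main(String[] args) {
        BufferedReader in = new BufferedReader(new InputStreamReader(System.in, StandardCharsets.UTF_8));
        PrintWriter out = new PrintWriter(new OutputStreamWriter(System.out, StandardCharsets.UTF_8));
        dropColumns(in, out, new HashSet<>(Arrays.asList(args)));
    }
}
```

Because each line is written as soon as it is read, operations that need the whole table at once, such as sorting rows, are not possible in this style.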

java -Xmx256M -classpath .;.\HTMLtools.jar \

HTMLtools -batchProcessing:batchList.doit

java -Xmx256M -classpath .;.\HTMLtools.jar \

HTMLtools data.GBS/params-genBatchScripts.map

The Converter GUI is invoked from the command line as:

java -Xmx512M -classpath .;.\HTMLtools.jar HTMLtools -gui

or using the MS Windows script (similar for Mac and Linux):

cvtTxt2HTML-GUI.bat

This is illustrated in the following screen shots.

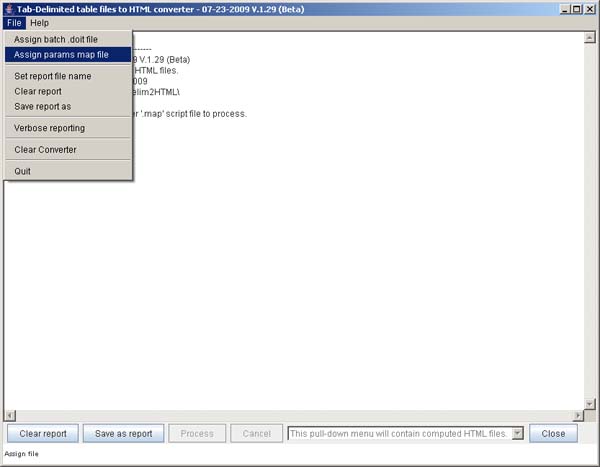

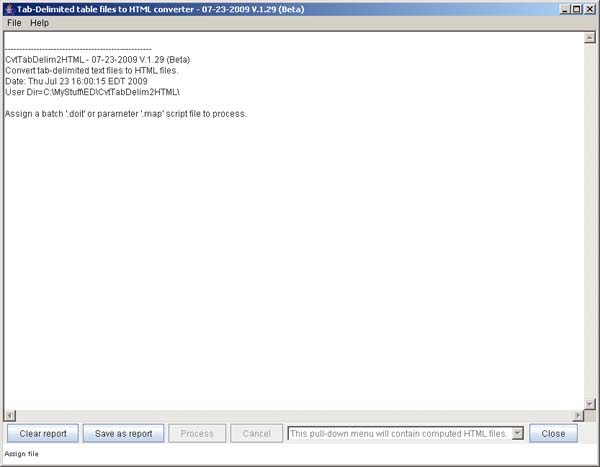

Figure G.1 This shows the initial graphical user interface. Using the File menu, the user should select either a batch ".doit" file or a parameter ".map" script file. The File menu is shown in Figure G.2.

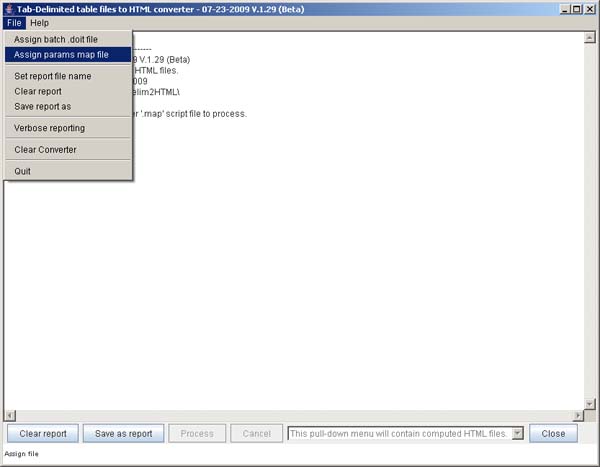

Figure G.2 This shows the File menu, from which the user should select either a batch ".doit" file or a parameter ".map" script file.

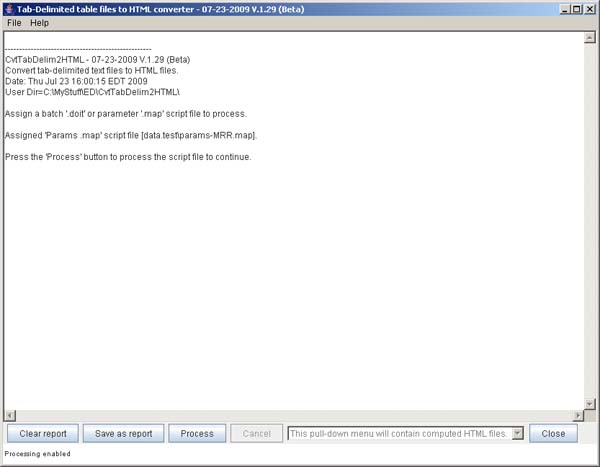

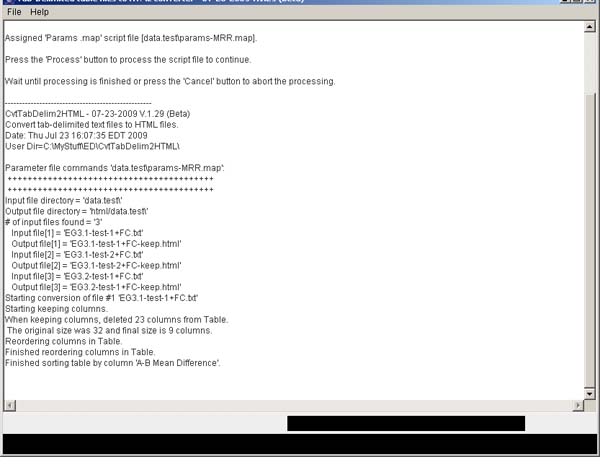

Figure G.3 This shows the GUI after selecting the script to process. The user then presses the Process button to start processing. The next Figure G.4 shows the program during processing.

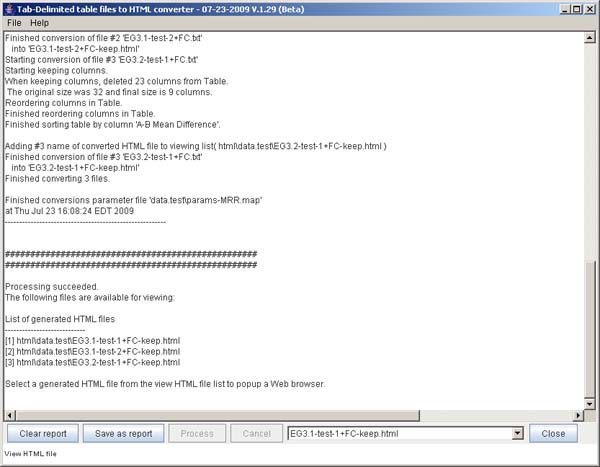

Figure G.4 This shows GUI during Processing. The output from the converter is shown in the Report window in the middle of the GUI. This can be saved into a .txt file or cleared if desired. The next Figure G.5 shows the program after processing is finished.

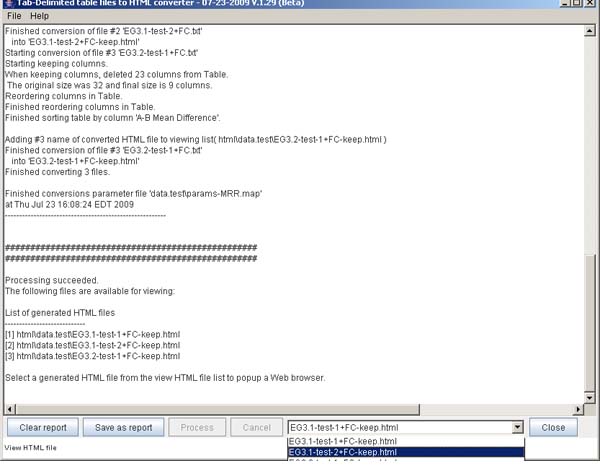

Figure G.5 This shows the GUI after Processing. The output from the converter is shown in the Report window in the middle of the GUI. This can be saved into a .txt file or cleared if desired. The next Figure G.6 shows the generated HTML files available for viewing.

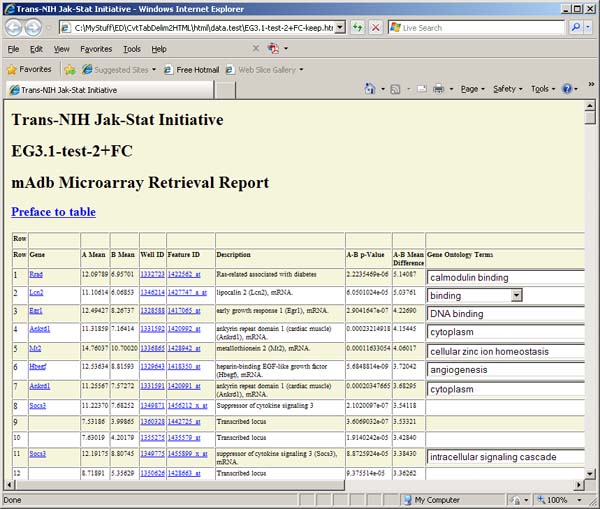

Figure G.6 This shows the generated HTML files that the GUI offers for viewing. Selecting one of them will pop up a Web browser with that file (see Figure G.7).

Figure G.7 This shows the popup Web browser window for the selected generated HTML file you chose to view.

However, if you must explicitly run the Java interpreter, you can do it on the command line (invoked various ways on different operating systems) by typing

java -Xmx256M -classpath . -jar searchGui.jar

or

java -Xmx256M -classpath .;.\HTMLtools.jar HTMLtools -searchGui

This line was put into a Windows .BAT file (SearchGUI.bat) that can be run by clicking on it. Notice that the -Xmx256M specification can be changed to increase or decrease the amount of memory used. The default memory may vary on different computers, so you can use the script to force the program to start with more or less memory if you run into problems.

You also need to select the set of samples to use by selecting one or more Experiment Groups (see the Jak-Stat Prospector Web site for details on Experiment Groups). In the 2. Select one or more 'Sample Experiment Groups' window, selecting ALL is the default and will select all 18 arrays. You can click on individual Experiment Groups. To select a range, click on the first one that starts the range and then hold the SHIFT key and click the end of the range. To select non-adjacent Experiment Groups, hold the CONTROL key as you select different groups. Pressing the Reset button will clear these two windows.

The File menu offers some additional data input options. You do not need to use any of these menu options to use the program. However, they can be useful for customizing your search results.

You can save the text output generated during processing that is shown in the 3. Processing Report Log scrollable text area at the bottom of the window. Several File menu commands are used with this, including Clear Report-Log and Save Report-Log As. Note that the Clear report and Save report as commands are also available as buttons with the same names at the bottom of the window.

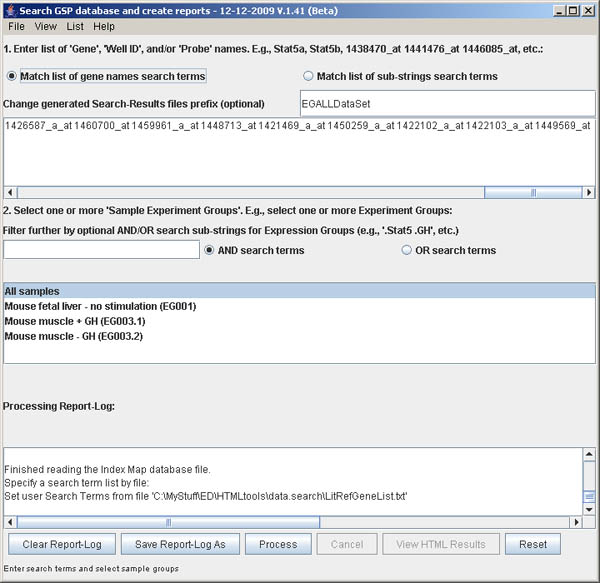

You must specify a list of data search terms in the upper window 1. Enter list of Gene, Well ID or Probe ID.... The simplest way to specify these terms is to either cut and paste or type them into the window. To help demonstrate and simplify specifying the search terms, there are two commands in the File menu for setting the list. One is Set demo term-list data, which enters the short list Stat5a Stat5b 1438470_at 1441476_at 1446085_at. The other is Import user term-list data from a file. The file can be a list of Genes or Feature IDs (probes) or Well IDs or any combination. Several example files are provided, including data.search/LitRefGeneList.txt, data.search/testGeneList.txt, and data.search/testFeatureIDList.txt. The first is a tab-delimited file with all three fields. The latter two examples just have lists of Genes or Feature IDs.

After you finish a search, you can do another one. The File menu options: Reset converter or the Reset button at the bottom of the Window will reset the search specification and make the Process button available.

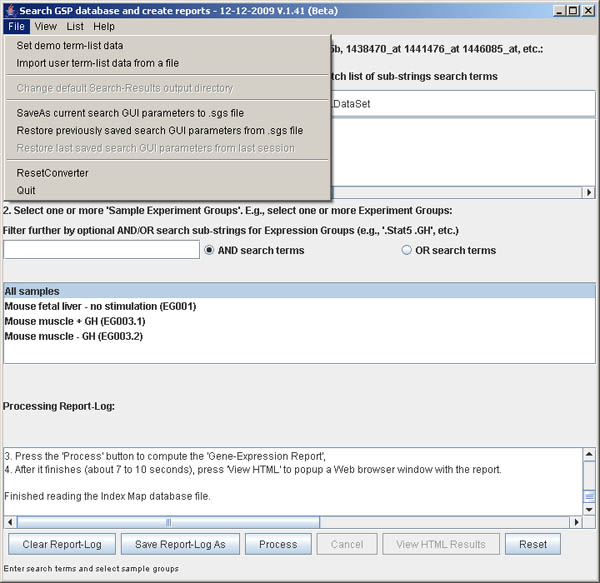

Figure S.2 This shows the menu options in the File menu.

This menu offers additional processing options described above.

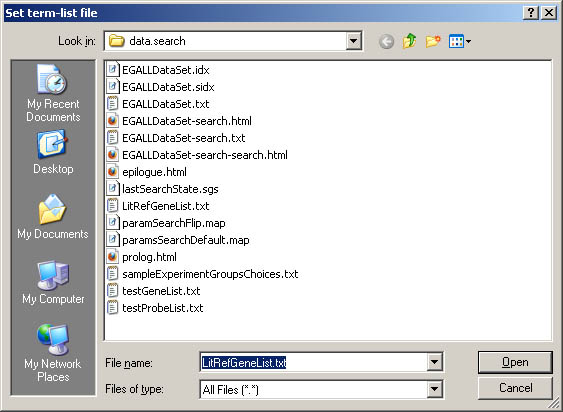

Figure S.3 This shows the popup file browser for specifying a list

of gene/probes in a .txt file using the

(File | Import user term-list data from a file) menu option.

If the testGeneList.txt file was selected, the next figure shows the

new term-list.

Figure S.4 This shows the new term-list specified from importing

the gene list from a file (previous figure).

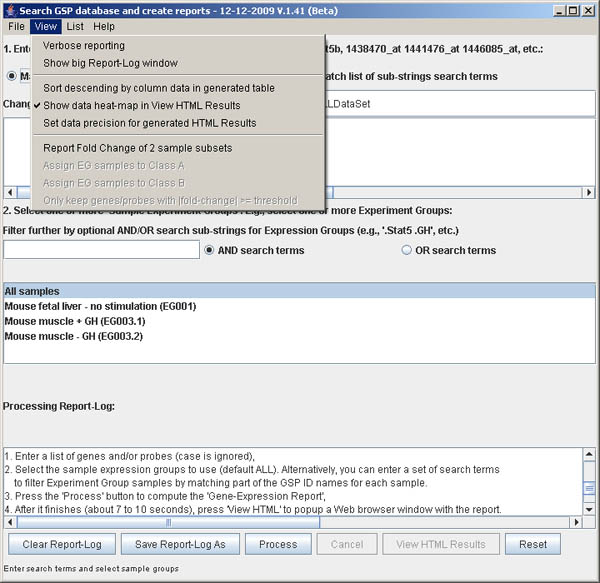

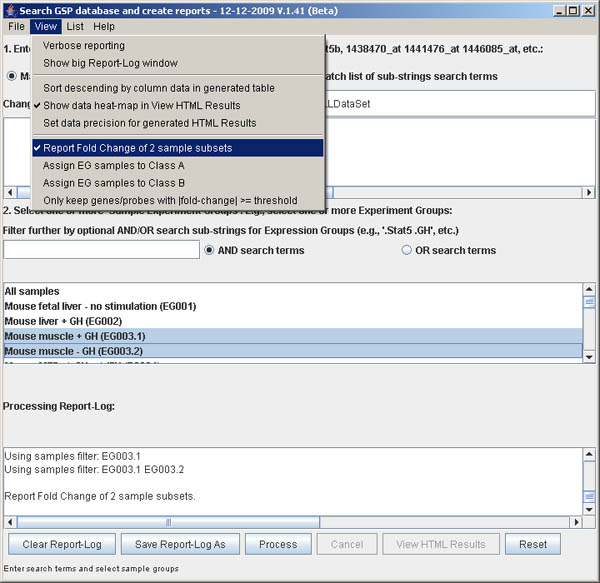

The View menu offers some additional data input options. The Verbose reporting check box can be enabled if you want to see the details of the search and table generation as they progress in the Report-Log window.

When the search results table is being generated, you can modify its presentation using other view options: Sort descending by column data in generated table (see Figure S.6 for more details). Show data heat-map in View HTML shows the generated results table as a colored heatmap (see Figure S.14 for an example); this is the default. Finally, Set data precision for generated HTML adjusts the number of digits presented in the generated table (0 shows no fraction, whereas the default -1 shows the full precision available in the data).

Figure S.5 This shows the menu options in the View menu.

This menu offers additional processing options described above.

Figure S.6 This shows the pop-up query that lets you specify the gene or gene probe ID column of the generated table to be used for sorting. The gene expression data for the gene probe you specify is then used to sort the sample rows of the entire table. The default is not to sort the data, but to use the order of the samples in the Experiment Groups you have specified. This pop-up window is invoked from the

(View menu | Sort descending by column data in generated table).
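The descending sort described above can be sketched as follows. This is a minimal sketch, assuming each sample row holds its values keyed by gene/probe column name and that samples lacking a value for the chosen column are placed last; SortSamplesByProbe and SampleRow are hypothetical names, and the values in main are illustrative only, not taken from the GSP data.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of sorting flipped-table sample rows by one probe column. */
final class SortSamplesByProbe {

    /** One sample row: the sample name plus its values keyed by gene/probe column name. */
    static final class SampleRow {
        final String sample;
        final Map<String, Double> values;

        SampleRow(String sample, Map<String, Double> values) {
            this.sample = sample;
            this.values = values;
        }
    }

    /** Returns a new list sorted by the chosen column, largest value first. */
    static List<SampleRow> sortDescending(List<SampleRow> rows, String column) {
        // Missing values count as negative infinity, so they end up last.
        Comparator<SampleRow> byValue = Comparator.comparing(
                (SampleRow r) -> r.values.getOrDefault(column, Double.NEGATIVE_INFINITY));
        List<SampleRow> sorted = new ArrayList<>(rows); // leave the Experiment Group order intact
        sorted.sort(byValue.reversed());                 // stable: ties keep their original order
        return sorted;
    }

    public static void main(String[] args) {
        String probe = "Stat5b/1422103_a_at";
        List<SampleRow> rows = List.of(
                new SampleRow("sample1", Map.of(probe, 2.0)),
                new SampleRow("sample2", Map.of(probe, 9.0)),
                new SampleRow("sample3", Map.of()));     // no value for this probe
        for (SampleRow r : sortDescending(rows, probe)) {
            System.out.println(r.sample);
        }
    }
}
```

Using a stable sort means that samples with equal values stay in their Experiment Group order, which matches the unsorted default as closely as possible.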

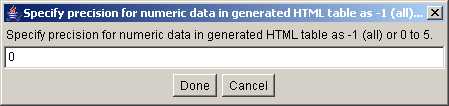

Figure S.7 This shows the dialog box (View menu | Set data precision for generated HTML). The default is -1, which prints all digits available in the generated HTML table. Setting it to 0 removes all fractions (as used in this example).

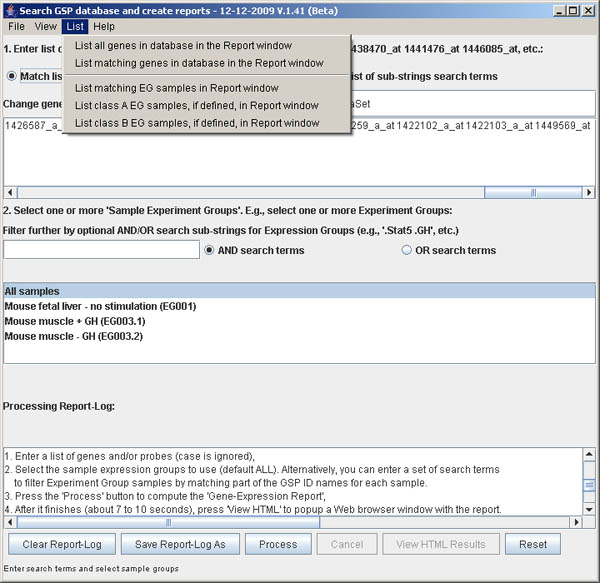

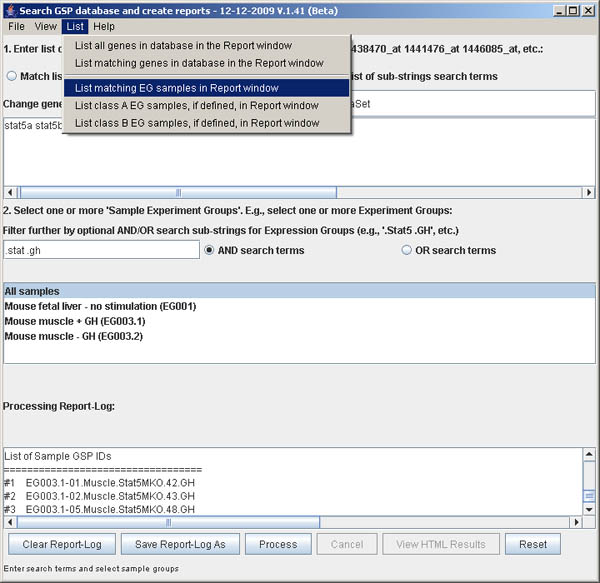

Figure S.8 This shows the menu options in the List menu.

You may list some of the data matching the gene/probe search terms or EG sample search terms before doing the search. The first option lists all 45K gene/probe IDs. The second menu option lets you specify gene/probe search terms using either exact gene names or substrings; all matching genes/probes are reported. The third menu option lists the EG samples in the EG groups selected from the list. In addition, if EG sample search terms are specified, this list is filtered by those substrings, which can be combined so that all terms are required (AND) or any one term is sufficient (OR). All lists are reported in the bottom scrollable Report window.

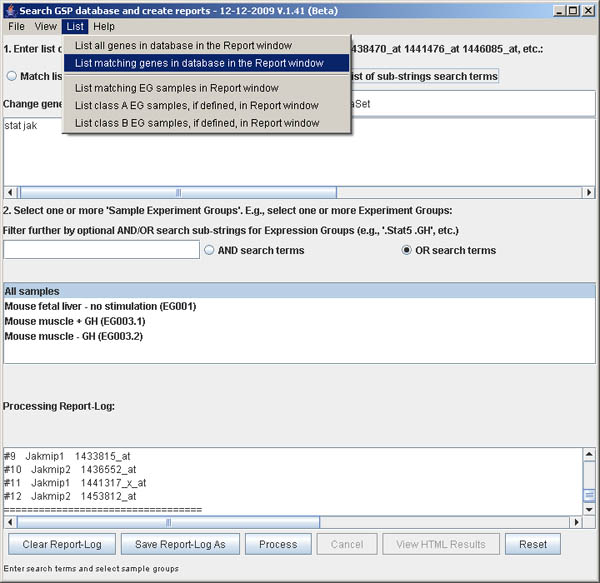

Figure S.9 This shows results from

List menu | List matching genes in database in the Report

window.

The genes/probes matching the substring terms "stat" and "jak"

in the 45K probe database are listed in the scrollable

Processing Report log at the bottom of the window.

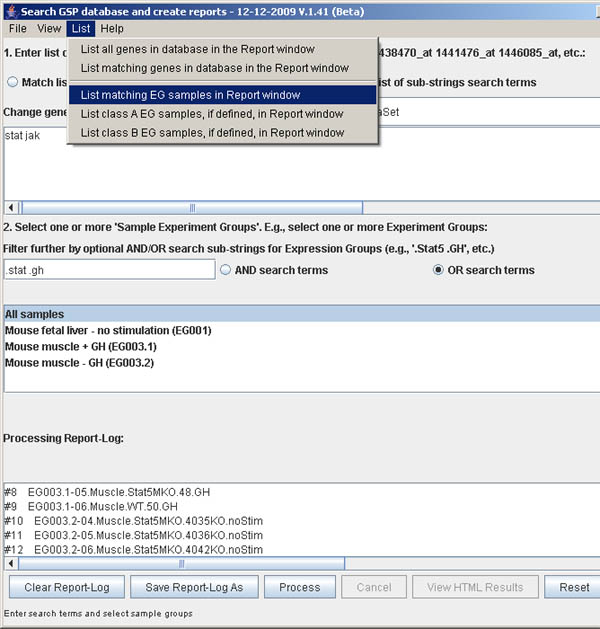

Figure S.10 This shows results from

List menu | List matching EG samples in database in the Report

window using the OR condition.

The Experiment Group (EG) samples matching the substring terms ".treated" or ".untreated" in the 151 sample database are listed in the scrollable Processing Report log at the bottom of the window. It searches within the EG sample groups you have selected. In this example, we have selected "All samples", but any other subset could be used. Also, we required an OR condition to select samples where either of the search terms is present.

Figure S.11 This shows results from

List menu | List matching EG samples in database in the Report

window using the AND condition.

The Experiment Group (EG) samples matching the substring terms ".stat" and ".GH" in the 18 sample database are listed in the scrollable Processing Report log at the bottom of the window. It searches within the EG sample groups you have selected. In this example, we have selected "All samples", but any other subset could be used. Also, we required an AND condition to select samples where both search terms are present.
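The AND/OR substring filtering illustrated in Figures S.10 and S.11 can be sketched as follows. This is a minimal sketch, assuming case-insensitive substring matching (the manual does not say whether matching ignores case); SampleNameFilter is a hypothetical class, and the sample names in main are made up for illustration rather than taken from the GSP database.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.Predicate;

/** Hypothetical sketch of filtering sample names by substring terms with AND or OR. */
final class SampleNameFilter {

    enum Mode { AND, OR }

    /** Keeps the names that contain all (AND) or any (OR) of the terms, ignoring case. */
    static List<String> filter(List<String> names, List<String> terms, Mode mode) {
        List<String> hits = new ArrayList<>();
        for (String name : names) {
            String lower = name.toLowerCase(Locale.ROOT);
            Predicate<String> present = t -> lower.contains(t.toLowerCase(Locale.ROOT));
            boolean match = (mode == Mode.AND)
                    ? terms.stream().allMatch(present)
                    : terms.stream().anyMatch(present);
            if (match) {
                hits.add(name);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Hypothetical sample names; real GSP sample names may be formatted differently.
        List<String> names = List.of("EG003.1.stat5KO.GH.1", "EG003.2.stat5KO.untreated.1", "EG001.1.wt.GH.1");
        System.out.println(filter(names, List.of(".stat", ".GH"), Mode.AND));
        System.out.println(filter(names, List.of(".treated", ".GH"), Mode.OR));
    }
}
```

Because the leading "." is part of each term, ".treated" does not match a name containing ".untreated"; this is why both terms appear in the Figure S.10 example.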

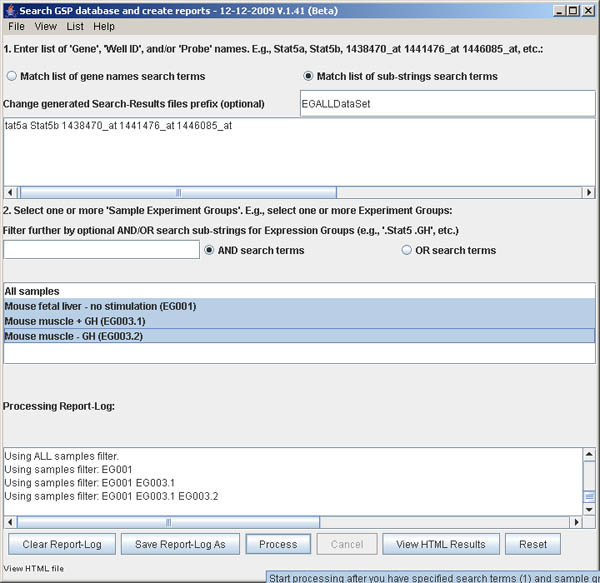

Figure S.12 This shows the Search window before processing, with the Process button available. Pressing it will start processing. This will typically take 7 to 10 seconds, so be patient. Note that the View HTML button is disabled and will be enabled after processing is completed.

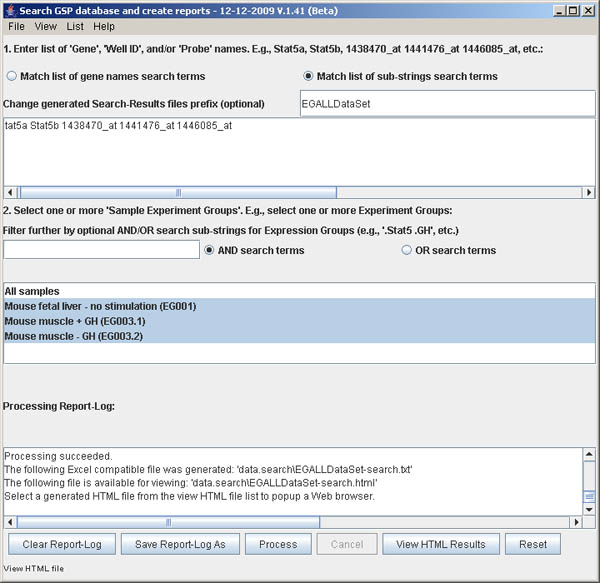

Figure S.13 This shows the Search window after processing

is finished and the View HTML button is made available. Pressing

it will pop up a local web browser with the data shown in the next

figure. Note that the Process button is now disabled and will remain disabled until you reset the converter using the Reset button.

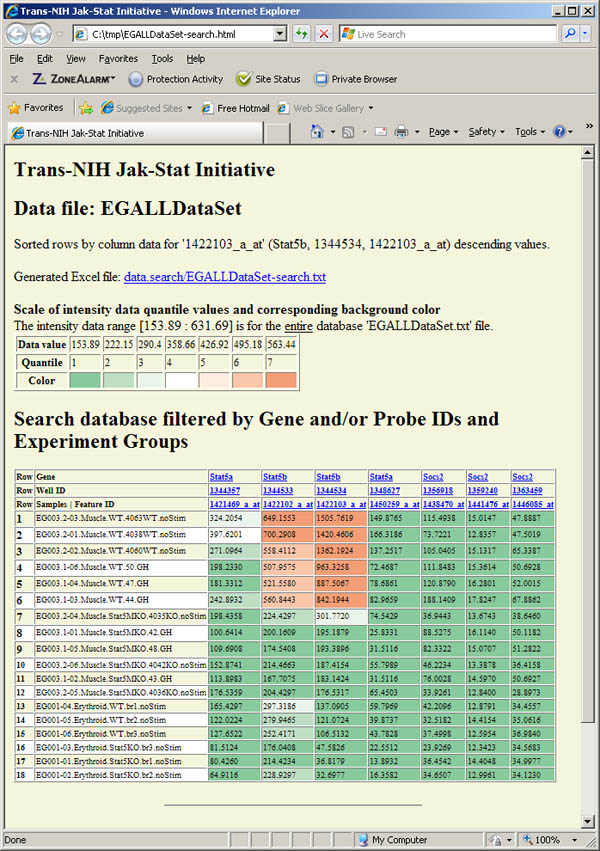

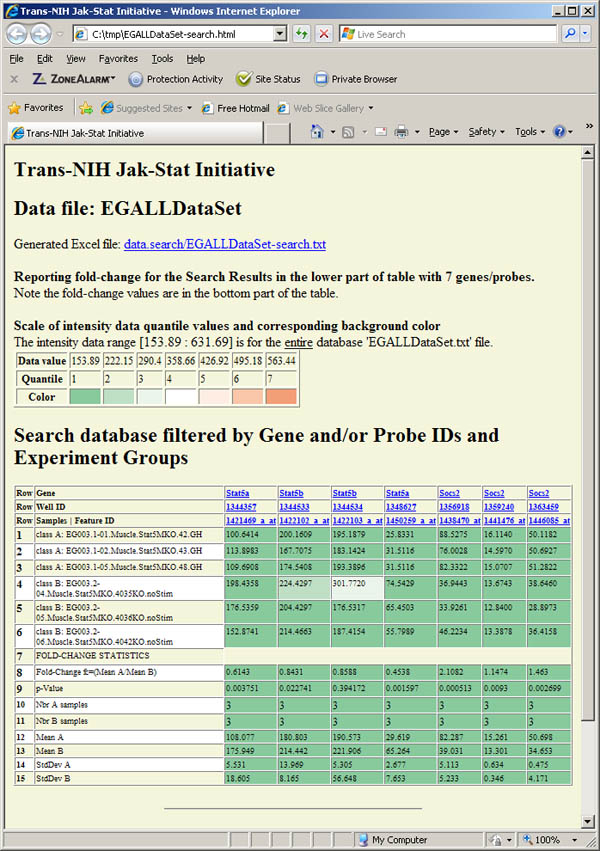

Figure S.14 This shows the generated table Web page created

by the above search and viewed when the View HTML button

was pressed. The colored cells reflect the quantiles that the data belong

to and are based on (max, min, mean, stddev) statistics computed over

the entire database. The data was sorted by the third probe (Stat5b/1422103_a_at)

and the numeric data was listed with no fractions to make it easier to "eyeball"

the data.
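The manual says only that the cell colors reflect quantiles derived from (max, min, mean, stddev) statistics over the whole database. As a simple stand-in, the sketch below bins each value by its distance from the database mean in standard-deviation units. The bin edges (plus or minus 1 and 2 standard deviations), the palette, and the class name HeatMapBins are all assumptions for illustration, not the HTMLtools color scheme.

```java
/** Hypothetical heat-map binning by z-score; not the HTMLtools color scheme. */
final class HeatMapBins {

    // Illustrative palette from low (blue) through white to high (red).
    static final String[] PALETTE = {"#0000FF", "#8080FF", "#FFFFFF", "#FF8080", "#FF0000"};

    /** Returns 0..4 depending on how many standard deviations the value is from the mean. */
    static int bin(double value, double mean, double stddev) {
        if (stddev <= 0) {
            return 2; // degenerate statistics: treat every value as "typical"
        }
        double z = (value - mean) / stddev;
        if (z < -2) return 0;
        if (z < -1) return 1;
        if (z <= 1) return 2;
        if (z <= 2) return 3;
        return 4;
    }

    /** Builds one colored HTML table cell for the value. */
    static String cell(String text, double value, double mean, double stddev) {
        return "<TD BGCOLOR=\"" + PALETTE[bin(value, mean, stddev)] + "\">" + text + "</TD>";
    }

    public static void main(String[] args) {
        // Illustrative database statistics: mean 500, stddev 200.
        System.out.println(cell("1100", 1100, 500, 200)); // z = 3, top bin
        System.out.println(cell("450", 450, 500, 200));   // z = -0.25, middle bin
    }
}
```

Computing the statistics once over the entire database, as the caption describes, keeps the colors comparable between different searches.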

Figure S.16 This shows the report generated that includes the intensity data

followed by the fold-change and statistics for that data generated using the data

in the previous figure.

There are three additional subsets of specialized commands, which are described separately: the 3.1 GenBatchScripts commands, the 3.2 Tests-Intersection commands, and the 3.3 Java TreeView commands.


The parameters that specify the data used in generating the output files include several directories in the batchScripts/ directory:

Additional data files used when the -genBatchScripts command is run include:

There may be multiple instances of the -genCopySupportFile, -genParamTemplate,

-genSummaryTemplate switches.

One could experiment with these parameter files adding or removing various

options such as -dropColumn, -reorderColumn, -sortTable, etc.

Adding fold-change statistics to the generated HTML report

This procedure compares the fold change of the Stat5 subsets for the specified genes (I used the demo set of genes/probes) between two sets of samples, EG003.1 (Stat5KO+GH) and EG003.2 (Stat5KO-GH), called classes A and B here and in the SearchGUI menus and report.

Procedure

Note that the fold-change results are appended to the regular table, and the class A and class B samples have those identifiers prefixed to their sample names. The fold-change report is in the second half of the report, with the statistics computed on the column data for each gene/probe. Sorting can't be enabled when generating the fold-change report data, since it would cause problems with the reporting format.
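The manual does not give the fold-change formula used in the report. As a minimal sketch, assuming linear-scale intensities and a fold change defined as the ratio of the class A mean to the class B mean, the per-gene/probe statistics could be computed as below. FoldChange and its methods are hypothetical; for log2-scaled data, the difference of the class means would play the role of the log fold change instead.

```java
/** Hypothetical per-probe fold-change statistics for two sample classes. */
final class FoldChange {

    static double mean(double[] xs) {
        double sum = 0;
        for (double x : xs) {
            sum += x;
        }
        return sum / xs.length;
    }

    /** Sample standard deviation (n - 1 denominator); NaN for fewer than 2 values. */
    static double stdDev(double[] xs) {
        if (xs.length < 2) {
            return Double.NaN;
        }
        double m = mean(xs);
        double ss = 0;
        for (double x : xs) {
            ss += (x - m) * (x - m);
        }
        return Math.sqrt(ss / (xs.length - 1));
    }

    /** Ratio of class means, assuming linear (not log-transformed) intensities. */
    static double foldChange(double[] classA, double[] classB) {
        return mean(classA) / mean(classB);
    }

    public static void main(String[] args) {
        // Illustrative intensities for one probe in class A (e.g. EG003.1) and class B (e.g. EG003.2).
        double[] a = {4.0, 6.0};
        double[] b = {1.0, 3.0};
        System.out.printf("meanA=%.2f meanB=%.2f sdA=%.3f FC=%.2f%n",
                mean(a), mean(b), stdDev(a), foldChange(a, b));
    }
}
```

Computing the statistics column by column (one gene/probe at a time) mirrors the report layout described above, where each gene/probe column gets its own fold-change and summary values.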

Figure S.15 This shows the menu options in the View menu after

the (View | Report Fold Change of 2 sample subsets) option was enabled.

Note the two new commands that are activated: Assign EG samples to Class A and

Assign EG samples to Class B.

e.g., set 2. filter sample search term to ".stat", select EG003.1 in the scrollable

list, then select

(View menu | Assign EG samples to Class A) to define class A samples

e.g., set 2. filter sample search term to ".stat", select EG003.2 in the scrollable

list, then select

(View menu | Assign EG samples to Class B) to define class B samples.

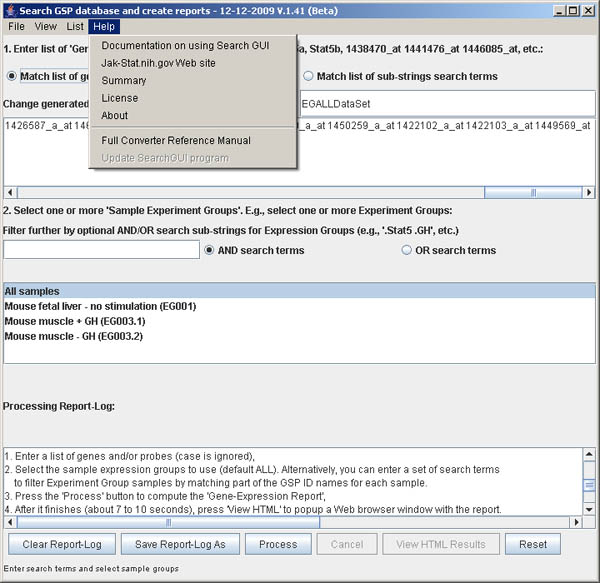

SearchGUI Help

There are several Web pages that contain the documentation in the Help menu.

Figure S.17 This shows the menu options in the Help menu.

The first entry, Documentation on using the Search GUI, is this document.

3. COMMAND LINE SWITCHES

Command line switches are case-sensitive and of the form '-switchName:a1,a2,...,an', where 'switchName' is the switch name (the switches below are shown with the minimum number of characters needed to recognize them), and 'a1', 'a2', etc. are the comma-separated switch arguments, with no spaces between the commas and the arguments. Use double quotes around arguments that contain spaces. Tabs are not allowed, and all switches must be on the same line unless either the switches are in a parameter file (in which case they are on separate lines) or the command line is entered using line continuation characters for the operating system (e.g., '\' in Unix). Switches with arguments require the comma-separated arguments after the ':'. We denote the arguments as being within '{'...'}' brackets. Note that you do not include the '{' or '}' brackets in the actual switches; they just denote an argument.
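The switch syntax above can be illustrated with a small parser. This is a minimal sketch, assuming that a switch is recognized when the name typed starts with its minimum abbreviation (consistent with the '-batchProcessing:batchList.doit' example earlier in this manual for the '-batchProcess' switch) and that double quotes only group characters. The class SwitchParser and its abbreviation table are hypothetical and cover only a few of the switches listed in this section.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical parser for '-switchName:a1,a2,...,an' tokens. */
final class SwitchParser {

    /** Minimum abbreviation -> full switch name (a small illustrative subset). */
    private static final Map<String, String> ABBREVIATIONS = new LinkedHashMap<>();
    static {
        ABBREVIATIONS.put("addE", "addEpilogue");
        ABBREVIATIONS.put("addO", "addOutfilePostfix");
        ABBREVIATIONS.put("addP", "addProlog");
        ABBREVIATIONS.put("batchP", "batchProcess");
        ABBREVIATIONS.put("dropColumn", "dropColumn");
        ABBREVIATIONS.put("files", "files");
    }

    static final class Parsed {
        final String name;
        final List<String> args;

        Parsed(String name, List<String> args) {
            this.name = name;
            this.args = args;
        }
    }

    static Parsed parse(String token) {
        if (!token.startsWith("-")) {
            throw new IllegalArgumentException("not a switch: " + token);
        }
        int colon = token.indexOf(':'); // first ':' separates the name from the arguments
        String given = (colon < 0) ? token.substring(1) : token.substring(1, colon);
        for (Map.Entry<String, String> e : ABBREVIATIONS.entrySet()) {
            if (given.startsWith(e.getKey())) { // case-sensitive, as the manual requires
                List<String> args = (colon < 0) ? List.of() : splitArgs(token.substring(colon + 1));
                return new Parsed(e.getValue(), args);
            }
        }
        throw new IllegalArgumentException("unknown switch: -" + given);
    }

    /** Splits on commas that are not inside double quotes; the quotes themselves are dropped. */
    static List<String> splitArgs(String s) {
        List<String> out = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean quoted = false;
        for (char c : s.toCharArray()) {
            if (c == '"') {
                quoted = !quoted;
            } else if (c == ',' && !quoted) {
                out.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        out.add(current.toString());
        return out;
    }

    public static void main(String[] args) {
        Parsed p = parse("-files:a.txt,\"my data.txt\"");
        System.out.println(p.name + " " + p.args);
    }
}
```

Splitting at the first ':' only means that arguments may themselves contain colons, such as Windows drive letters.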

There may be multiple instances of some of the switch commands

including: -files, -hrefData, -dropColumn, -keepColumn, -reorderColumn,

-sortTableByColumn, -mapDollarsigns, -mapQuestionmarks, -copyFile,

-copyTree, -genCopySupportFile, -genParamTemplate, -genSummaryTemplate,

-genCopyfile, -genTreeCopyData, -dirIndexHtml.

{parameter command file}

[this argument does not start with '-' and is thus

assumed to be a parameter command file. It will then

get all of the command switches from this file if

present. Examples of command file contents are in

the EXAMPLES section below. By convention, we name

these command text files 'paramXXX.map' with a '.map'

file extension and keep them in the same directory

that we specify with -inputDirectory. We refer to these

files throughout this document as "params .map" files.

The .map file extension is used for tab-delimited text

files that we do not want to convert. We only convert

tab-delimited text files with .txt file extensions.]

-addE:{opt. epilogue file name}

['-addEpilogue:{opt epilogue filename}' add an

epilogue HTML file in inputDir or user directory

(common epilogue for all conversions). If the

keywords $$DATE$$ or $$INPUTFILENAME$$ is in the

file, it will substitute today's date or file name

respectively. $$FILE_ZIP_EXTENSION$$ will substitute

the file name with a ".zip" extension. Default name is

'epilogue.html'. Default is to not add an epilogue to

the HTML output.]

-addO:{postfix name}

['-addOutfilePostfix:{postfix name}' add a postfix

name to the output file before the .html. E.g., for

an output file 'abc.html', with a postfix name of

'-xyz', the new name is 'abc-xyz.html'. This can

be useful if you are mapping the same input file

by several different param.map files and saving

them all in the same html/ output directory.]

-addP:{opt. prolog file name}

['-addProlog:{opt. prolog file name}' to add a prolog

HTML file in inputDir or user directory (common prolog

for all conversions). If the keywords $$DATE$$ or

$$INPUTFILENAME$$ is in the file, it will

substitute today's date or file name respectively.

$$FILE_ZIP_EXTENSION$$ will substitute the file name

with a ".zip" extension. Default name is 'prolog.html'.

The default is to not add a prolog to the HTML output.]
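The keyword substitution described for the prolog and epilogue files can be sketched as follows. This is a minimal sketch, assuming ISO (yyyy-mm-dd) date formatting and that $$FILE_ZIP_EXTENSION$$ replaces the input file's last extension with ".zip"; the manual does not specify either detail, and TemplateKeywords is a hypothetical class.

```java
import java.time.LocalDate;

/** Hypothetical sketch of the prolog/epilogue keyword substitution. */
final class TemplateKeywords {

    static String substitute(String template, String inputFileName, LocalDate today) {
        return template
                .replace("$$DATE$$", today.toString())                 // ISO yyyy-mm-dd (assumed format)
                .replace("$$INPUTFILENAME$$", inputFileName)
                .replace("$$FILE_ZIP_EXTENSION$$", withExtension(inputFileName, ".zip"));
    }

    /** Replaces the last extension, e.g. "abc.txt" -> "abc.zip". */
    static String withExtension(String fileName, String newExt) {
        int dot = fileName.lastIndexOf('.');
        return (dot > 0 ? fileName.substring(0, dot) : fileName) + newExt;
    }

    public static void main(String[] args) {
        String epilogue = "<P>Generated $$DATE$$ from $$INPUTFILENAME$$. "
                + "Download <A HREF=\"$$FILE_ZIP_EXTENSION$$\">the data</A>.</P>";
        System.out.println(substitute(epilogue, "EG3.1-test-2+FC.txt", LocalDate.now()));
    }
}
```

String.replace substitutes every literal occurrence, so a keyword may appear several times in one prolog or epilogue; no regular-expression escaping of the "$$" markers is needed.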

-addRow

['-addRowNumbers' to preface each row with sequential

row numbers. Default is to not add row numbers.]

-addT

['-addTableName' to add TABLE name to HTML. Default

is to not add the name.]

-allowH

['-allowHdrDups' to allow duplicate column fields

in the header. Default is to not allow duplicates.]

-alt:{color name}

['-alternateRowBackgroundColor:{c}' alternate the

background row cell colors in the <TABLE>.

Default is no color changes.]

-batchP:{file of param specs, opt. new working dir}

['-batchProcess:{file of param specs, opt. new working dir}'

batch process a list of param.map type files specified

in a file. If the {opt. new working dir} value is specified, it will change the current working directory of HTMLtools when running -batchProcess so that you can make it run in a particular environment.

No other switches should be used with this as they will

be ignored. If errors occur in any of the batch jobs,

the errors are logged in the HTMLtools.log file

and it aborts that particular job and continues on

to do the next job in the batch list. Default

is no batch processing.]
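The "log the error and continue with the next job" behavior of -batchProcess can be sketched as follows. This is a minimal sketch, assuming one params .map file name per line of the batch list and that blank lines are skipped; BatchRunner and its Job interface are hypothetical stand-ins for an HTMLtools conversion run, and the log is written to any PrintWriter rather than specifically to HTMLtools.log.

```java
import java.io.PrintWriter;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of "log the failure and continue" batch processing. */
final class BatchRunner {

    /** One job: convert a single params .map file (stands in for an HTMLtools run). */
    interface Job {
        void run(String paramsFile) throws Exception;
    }

    /** Runs each listed params file in order and returns the ones that failed. */
    static List<String> runAll(List<String> batchList, Job job, PrintWriter log) {
        List<String> failed = new ArrayList<>();
        for (String line : batchList) {
            String paramsFile = line.trim();
            if (paramsFile.isEmpty()) {
                continue; // assumption: blank lines in the batch list are skipped
            }
            try {
                job.run(paramsFile);
            } catch (Exception e) {
                // Abort only this job, record why, and move on to the next one.
                log.println("ERROR in " + paramsFile + ": " + e.getMessage());
                failed.add(paramsFile);
            }
        }
        log.flush();
        return failed;
    }

    public static void main(String[] args) {
        PrintWriter log = new PrintWriter(System.err);
        List<String> failed = runAll(
                List.of("ParamScripts/a.map", "ParamScripts/b.map"), // hypothetical file names
                p -> System.out.println("processing " + p),
                log);
        System.out.println(failed.size() + " job(s) failed");
    }
}
```

Catching the exception per job, rather than around the whole loop, is what lets one bad params file fail without stopping the rest of a long buildWebPages-style batch.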

-concat:{concatenatedDataFile,opt."noHTML"}

['-concatTables:{concatenatedDataFile,opt."noHTML"}' to create a new tab-delimited {concatenatedDataFile} (e.g., a ".txt" or ".map" file) and, if the "noHTML" option is not specified, a .html output file named using the base name (without the ".txt" or ".map" file extension) of the {concatenatedDataFile}. The data comes from concatenating the input text files if-and-only-if they have exactly the same column header names. The -outputDir specifies where the files are saved. The input files are not converted to HTML files. Default is to not concatenate the input files. The -makeMapFile switch can be used along with the concat switch to make a map file with fewer columns.]
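The header check that -concatTables performs before concatenating can be sketched as follows. This is a minimal sketch, assuming "exactly the same column header names" means identical header lines (same names in the same order) and that a mismatch is reported by throwing an exception; ConcatTables is a hypothetical class, and how HTMLtools itself reports a mismatch is not described here.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of -concatTables: append data rows only when headers match exactly. */
final class ConcatTables {

    /** Each table is a list of tab-delimited lines whose first line is the header. */
    static List<String> concat(List<List<String>> tables) {
        List<String> result = new ArrayList<>();
        String header = null;
        for (List<String> table : tables) {
            if (table.isEmpty()) {
                continue; // nothing to add from an empty file
            }
            if (header == null) {
                header = table.get(0);
                result.add(header); // the shared header is written once
            } else if (!header.equals(table.get(0))) {
                throw new IllegalArgumentException("column headers differ: " + table.get(0));
            }
            result.addAll(table.subList(1, table.size())); // data rows only
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> merged = concat(List.of(
                List.of("Gene\tValue", "Stat5a\t1.5"),
                List.of("Gene\tValue", "Stat5b\t2.0")));
        merged.forEach(System.out::println);
    }
}
```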

-copyFile:{srcFile,destDir}

['-copyFile:{srcFile,destDir}' to copy an input source file {srcFile} to a destination subdirectory {destDir}. There can be multiple instances of this option. Default is to not copy files.]

-copyTree:{sourceTreeDir,destDir}

['-copyTree:{srcTreeFiles,destPath}' to copy an input

source tree subdirectory to a destination subdirectory.

There can be multiple instances of this option. Default is

to not copy tree data.]

-dataP:{nbr digits precision}

['-dataPrecisionHTMLtable:{nbr digits precision}' sets the precision to use in numeric data for a generated HTML file. The table must be a numeric data table (such as one generated using the '-flipTableByIndexMap' option). If the value is < 0, then use the full precision of the data (as supplied in the input string data). If {nbr digits precision} >= 0, then clip digits as required.]
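The precision rule above can be sketched for a single cell as follows. This is a minimal sketch, assuming "clip" means truncation toward zero (rounding would use RoundingMode.HALF_UP instead) and that non-numeric cells are left unchanged; DataPrecision is a hypothetical class, not the HTMLtools code.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

/** Hypothetical sketch of the -dataPrecisionHTMLtable rule for one table cell. */
final class DataPrecision {

    static String format(String cell, int digits) {
        if (digits < 0) {
            return cell; // full precision: keep the input text unchanged
        }
        try {
            // "Clip" is read here as truncation toward zero.
            return new BigDecimal(cell.trim()).setScale(digits, RoundingMode.DOWN).toPlainString();
        } catch (NumberFormatException e) {
            return cell; // non-numeric cells (names, IDs) pass through
        }
    }

    public static void main(String[] args) {
        for (int digits : new int[] {-1, 0, 2}) {
            System.out.println(digits + " -> " + format("1234.5678", digits));
        }
    }
}
```

Working from the input text with BigDecimal, rather than parsing to double, keeps the -1 case byte-for-byte identical to the input and avoids binary floating-point artifacts when digits are clipped.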

-dirIndexHtml:{dir,'O'verride or 'N'oOverride}

['-dirIndexHtml:{dir,'O'verride or 'N'oOverride}' to create "index.html" files listing all of the files in the specified directories, given as a list of directories using multiple copies of this switch. It is useful when copying a set of directories to a Web server that does not show the contents of a directory if there is no index.html file. If the corresponding flag is 'O' (Override), an existing "index.html" file in that directory is overwritten; otherwise, an existing "index.html" file is left unchanged and no new one is generated. This is done recursively on each directory. Default is no index.html file generation. Multiple copies of the switch are allowed.]

-dropColumn:{column header name}

['-dropColumn:{column header name}' to specify a

column to drop from the output TABLE. There can be

multiple instances of this switch.]

-exportB:{opt. big size threshold}

['-exportBigCellsToHTMLfile:{opt. size for big}'

to save the contents of big cells as separate

HTML files named 'big-R<r>C<c>-<outputFileName>'.

So for a (r,c) of (4,5) and a file name 'xyz.html',

the generated name would be 'big-R4C5-xyz.html'.

The big size threshold defaults to 200. Default is no

exporting of big cells.]
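A minimal Java sketch of the naming rule above. The class and method names are hypothetical, and it assumes that a cell counts as "big" when its length is strictly greater than the threshold; the manual only gives the default threshold of 200.

```java
/**
 * Hypothetical sketch of the '-exportBigCellsToHTMLfile' naming rule.
 * Assumption: a cell is "big" when its length exceeds the threshold.
 */
class BigCellExportSketch {

    static final int DEFAULT_BIG_SIZE = 200; // documented default threshold

    static boolean isBig(String cell, int threshold) {
        return cell.length() > threshold;
    }

    /** Builds 'big-R<r>C<c>-<outputFileName>', e.g. (4, 5, "xyz.html") gives "big-R4C5-xyz.html". */
    static String bigCellFileName(int r, int c, String outputFileName) {
        return "big-R" + r + "C" + c + "-" + outputFileName;
    }

    public static void main(String[] args) {
        String cell = "x".repeat(250); // a 250-character cell
        if (isBig(cell, DEFAULT_BIG_SIZE)) {
            System.out.println(bigCellFileName(4, 5, "xyz.html")); // big-R4C5-xyz.html
        }
    }
}
```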

-extractR:{colName,rowNbr,resourceTblFile,htmlStyle}

['-extractRow:{colName,rowNbr,resourceTblFile,

htmlStyle}' to get a keyword from the

table being processed at (colName,rowNbr) and then

to search a resourceTblFile for that keyword. If

it is found, then it will extract the header row and

the data row from the resource file and create

HTML of htmlStyle to insert into the epilogue.

If $$EXTRACT_ROW$$ is in the epilogue, then

replace it with the generated HTML else insert

the HTML at the front of the epilogue. The

htmlStyles may be DL, OL, UL and TABLE. Default

is no row extraction.]

-fastE:{outTblFile}

['-fastEditFile:{opt. output file}' to process the

input file data line by line without buffering the

data in a Table structure. Each line is remapped

on the fly using -mapHdrNames,

{-dropColumns or -keepColumns} followed by

-reorderColumns. Data is written immediately to an

output stream so it can handle huge files. Because

it is sequential, it can't do a -sortRowsByColumnData.

This would generally be used to generate tab-delimited

.txt files that can be randomly accessed. HTML table

generation is disabled. It is used instead of

'-saveEditedTable2File:{outTblFile,opt. "HTML"}'

and overrides the -saveEditedTable2File options.

Default is not to do a fast edit.]

-files:{f1,f2,...,fn}

['-files:{f1,f2,...,fn}' to specify a list of files

here rather than using all of the files in the

inputDir. You can have multiple instances of this

switch.]

-flipA:{flipAclass}

['-flipAclass:{flipAclass}' to specify the list of EG samples used

in class A if reporting the fold-change data in the flipped Table report.

Default is that no list of EG samples is specified.]

-flipB:{flipBclass}

['-flipBclass:{flipBclass}' to specify the list of EG samples used

in class B if reporting the fold-change data in the flipped Table report.

Default is that no list of EG samples is specified.]

-flipC:{flipColumnFile,flipColumnName} or -flipC:{*LIST*,flipColumnName,v1,v2,...vn}

['-flipColumnName:{flipColumnFile,flipColumnName}'

to specify the source Table column name to use in

filtering which row data to use in the

'-flipTableByIndexMap' operation. An alternative specification

is '-flipColumnName:{*LIST*,flipColumnName,v1,v2,...vn}'

where the values are listed explicitly. Multiple instances

of this '-flipColumnName' switch are used to specify

the header entries by '{flipColumnName}' of the new

flipped table. If the {flipColumnFile} files exist,

they are used to filter the {flipDataFile} row entries.

Only the rows of the original Table that match one

of the {column-data-list} entries will be transposed.

Default is to transpose all rows unless the filter

files are specified.]

-flipD:{flipDirectory}

['-flipDirectory:{flipDirectory}' to specify the directory to

save the generated flipped Table. Default is the data.search

directory.]

-flipE:{flipExcludeColumnName}

['-flipExcludeColumnName:{flipExcludeColumnName}' to specify the

column names from the source Table to exclude from the final flipped

Table using the '-flipTableByIndexMap' operation. Multiple instances

of this switch are allowed. Default is to include all data Table

columns unless the filter is specified.]

-flipO:{colHdrName1,colHdrName2,...,colHdrNameN}

['-flipOrderHdrColNames:{colHdrName1,colHdrName2,...,colHdrNameN}'

to specify the list of columns in the source Table that will be

used to create the flipped Table multi-line header entries.

This option must be specified when using the '-flipTableByIndexMap'

operation.]

-flipRowF:{flipRowFilterNamesfile} or

-flipRowF:{*LIST*,name1,name2,...,nameK}

['-flipRowFilterNamesFile:{flipRowNamesFile}' or the alternate

'-flipRowFilterNamesFile:{*LIST*,name1,name2,...,nameK}' switch specifies

the source Table column names to use in filtering which source sample

columns' data will be used as rows in the final flipped Table using the

'-flipTableByIndexMap' operation. If the "*LIST*" name is used instead

of the file name, then the rest of the switch lists the row names

explicitly. Only the columns of the original Table that partially match

one of the {flipRowNamesFile} entries will be transposed. Default is to

transpose all data Table columns unless the filter is specified.]

-flipRowGSP:{list of filter substrings}

['-flipRowGSPIDfilters:{list of filter substrings}' is an optional

list of substring filters used to filter Experiment Group sample name

rows in the flipped table computation when using the

'-flipTableByIndexMap:{flipDataFile,flipIndexMapFile}' switch. It matches

substrings in the GSP ID names of the samples, ignoring case. If more

than one substring is specified, then they must all be found for that

sample to be used (e.g., ".Stat .GH" requires both ".Stat" and ".GH"

to be present). Default is no filtering.]
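The all-substrings-must-match rule can be sketched as follows. This is a hypothetical illustration (the class and method names are not from HTMLtools), assuming the filter substrings are separated by white space as in the ".Stat .GH" example.

```java
import java.util.Locale;

/**
 * Hypothetical sketch of the '-flipRowGSPIDfilters' rule: a sample is kept only
 * if every filter substring occurs in its GSP ID, ignoring case.
 * Assumption: the filter list is whitespace-separated, as in ".Stat .GH".
 */
class GspIdFilterSketch {

    static boolean keepSample(String gspId, String filterList) {
        String id = gspId.toLowerCase(Locale.ROOT);
        for (String f : filterList.trim().split("\\s+")) {
            if (!f.isEmpty() && !id.contains(f.toLowerCase(Locale.ROOT))) {
                return false; // one required substring is missing
            }
        }
        return true; // all substrings found (or no filters given)
    }

    public static void main(String[] args) {
        System.out.println(keepSample("EG12.stat.gh.rep1", ".Stat .GH")); // true
        System.out.println(keepSample("EG12.Stat.rep1", ".Stat .GH"));    // false
    }
}
```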

-flipSa:{flipSaveOutputFile}

['-flipSaveOutputFile:{flipSaveOutputFile}' is the alternate

output (HTML and TXT) file name to use when generating the

flipped Table using the

'-flipTableByIndexMap:{flipDataFile,flipIndexMapFile,(opt)maxRows}'

switch. Default is to generate the output file name from the

input file base name, adding a postfix specified with

'-addOutfilePostfix:{postfix name}' or, if that switch is not

specified, the default "-flipped" postfix. (See Example 14

for an example of its usage.)]

-flipT:{flipDataFile,flipIndexMapFile,(opt)maxRows}

['-flipTableByIndexMap:{flipDataFile,flipIndexMapFile,(opt)maxRows}'

to generate a transposed file using random access file indexing to

create a multi-line header (1 line for each column name in the

list) using the list of columns previously specified with the

-flipColTableList and -flipRowTableList filters. It uses the index-map

created with the '-makeIndexMapFile:{colName1,colName2,...,colNameN}'

command. It analyzes the index map Table and then uses

all columns before the ("StartByte", "EndByte") columns

to define the flipped Table header. See '-flipColTableList' to

restrict which flipped column data to use and '-flipRowTableList'

to restrict which flipped row data to use. Default is to not flip

the Table.]

-flipU:{TRUE | FALSE}

['-flipUseExactColumnNameMatch:{TRUE | FALSE}' to specify the exact

match filter flag. If TRUE, then match the '-flipColumnName:{names}'

exactly; otherwise look for substring matches. Case is ignored in

both instances. This option may be specified when using the

'-flipTableByIndexMap' operation. The default if no

-flipUseExactColumnNameMatch is specified is "AND".]

-font:{-1,-2,-3,-4,+1,+2,+3,+4}

['-fontSizeHtml:{font Size modifier}' to change

the <TABLE> FONT SIZE in the HTML file.]

-gui

['-gui' to invoke the graphical user interface version

of the converter. See the section "Using the Graphical

User Interface (GUI) to run the converter."]

-hdrL:{n}

['-hdrLines:{n}' to set the number n of header lines. The last

header row is the one searched for mapping column URLs.

Default is 1 line.]

-hdrM:{oldHdrColName,newHdrColName}

['-hdrMapName:{oldHdrColName,newHdrColName}' to map

an old header column name {oldHdrColName} to a new

name {newHdrColName}. There may be multiple instances

of this switch. Default is to not do any mappings.]

-joinT:{joinTableFile}

['-joinTableFile:{joinTableFile}' adds the contents of

the {joinTableFile} file to the table being processed.

This allows us to add fields that can be used for

sorting the new table by the {joinTableFile} data

if it is defined. This switch can not be used with

the -fastEditFile option. Default is not to join

any tables.]

-keepColumn:{colName}

['-keepColumn:{colName}' specifies a column to keep;

use multiple instances of the switch to keep several columns.

Then, when the Table is processed, it drops all

columns not listed. It may be used as an

alternative to -dropColumn as the Table may have

unknown column names. Default is not active.]

-help (or '?')

[print instructions to see the README.txt file.]

-hrefD:{colName,Url,mapToken}

['-hrefData:{colHdrName,Url,(optional)mapToken}' to

map a column header name to a base Url that is used

to make URLs for the Table data in that column. The URL for

each cell is made by appending the cell data to the Url.

([TODO] If the optional mapToken is specified, then the cell

contents replace each occurrence of the mapToken in the Url.)

There can be multiple instances of this switch. See

the following switch '-hrefHeaderRow' to change the mapping

from Table data to header rows.]
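A minimal sketch of how a cell in a '-hrefData' column might be turned into a link. The anchor markup, the example base URL, and the class name are assumptions for illustration. The manual only states that the cell data is appended to the Url, so no URL-encoding is done here, and the [TODO] mapToken form is not shown.

```java
/**
 * Hypothetical sketch of '-hrefData:{colHdrName,Url}': each cell in the mapped
 * column becomes a link whose target is Url followed by the cell text.
 * Assumptions: the anchor markup below, and no URL-encoding of the cell text.
 */
class HrefDataSketch {

    static String linkCell(String baseUrl, String cell) {
        if (cell.isEmpty()) {
            return cell; // nothing to link
        }
        return "<A HREF=\"" + baseUrl + cell + "\">" + cell + "</A>";
    }

    public static void main(String[] args) {
        // Hypothetical base URL for a gene column
        System.out.println(linkCell("http://example.org/gene?id=", "STAT3"));
        // <A HREF="http://example.org/gene?id=STAT3">STAT3</A>
    }
}
```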

-hrefHeaderRow

['-hrefHeaderRowMapping' is used with the above switch

'-hrefData:{colHdrName,Url,(optional)mapToken}' to map

the data in the header row(s) instead of the data in

the Table data columns. It searches the first column of

the header rows to find the colHdrName to determine

the row to be mapped to that colHdrName. Unlike the

-hrefData option, the colHdrName can be embedded within

a string. The default is not to map the header rows.]

-inputD:{input directory}

['-inputDirectory:{input dir}' where the input

tab-delimited table .txt files to be converted are

found. By convention, we name other text files that

we may need, and want to keep in the inputDirectory

but do not want to convert to HTML, with a '.map'

file extension. Examples of non-data files include

'paramXXX.map', 'prolog.html', 'epilogue.html', etc.

Default directory is 'data/'.]

-limitM:{maxNbrRows,(opt.)sortFirstByColName,(opt.)'A'scending or 'D'escending}

['-limitMaxTableRows:{maxNbrRows,(opt.)sortFirstByColName,

(opt.)'A'scending or 'D'escending}' to limit the number of

rows of a table to {maxNbrRows}. If {sortFirstByColName}

is specified, then the table is sorted first before limiting the

number of rows. Default is not to limit rows.]

-log:{new log file name}

['-logName:{new log file name}' to log all

information about the processing to the console and

then to save this output in a log file. The new file

must end in ".log". Default is to use the

"HTMLtools.log" file name.]

-makeI:{colName1,colName2,...,colNameN}

['-makeIndexMapFile:{colName1,colName2,...,colNameN}' to

make an index map Table file (same name as the input file

but with an .idx file extension) of the input file (or the

file output from -saveEditedTable2File after the input

table has been edited). The index file will contain the

specified columns in the column-list followed by the

StartByte, EndByte for data in the input table with those

column values. This file can then be used to quickly

access rows of a huge input file, for example by loading it into

a hash table keyed on the selected column values to look up

the (start,end) file byte offsets used to randomly access the

large file. The software to use the index file is not

part of HTMLtools at this time.

The default is not to make an index map file.]
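Since the software that uses the index file is not part of HTMLtools, the following hypothetical Java sketch shows the idea: build (key, StartByte, EndByte) entries by scanning the bytes of a table, then use RandomAccessFile to read just one row. It assumes one header line, a single key column, and that EndByte is exclusive and excludes the line terminator; the real .idx layout may differ, and all names here are illustrative.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch of an index map like the one '-makeIndexMapFile' writes:
 * one (key, StartByte, EndByte) entry per data line of a tab-delimited table.
 * Assumptions: one header line, one key column, EndByte exclusive.
 */
class IndexMapSketch {

    static final class Entry {
        final String key;
        final long startByte;
        final long endByte;

        Entry(String key, long startByte, long endByte) {
            this.key = key;
            this.startByte = startByte;
            this.endByte = endByte;
        }
    }

    static List<Entry> buildIndex(byte[] data, int keyCol) {
        List<Entry> index = new ArrayList<>();
        int lineStart = 0;
        boolean inHeader = true;
        for (int i = 0; i <= data.length; i++) {
            if (i < data.length && data[i] != '\n') {
                continue; // still inside the current line
            }
            int end = i;
            if (end > lineStart && data[end - 1] == '\r') {
                end--; // tolerate CRLF line endings
            }
            if (end > lineStart) { // ignore empty lines
                if (inHeader) {
                    inHeader = false; // skip the single header line
                } else {
                    String line = new String(data, lineStart, end - lineStart, StandardCharsets.UTF_8);
                    String[] cells = line.split("\t", -1);
                    index.add(new Entry(cells[keyCol], lineStart, end));
                }
            }
            lineStart = i + 1;
        }
        return index;
    }

    /** Random access: reads just the bytes of one indexed line from the file. */
    static String readRow(String path, Entry e) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(path, "r")) {
            byte[] buf = new byte[(int) (e.endByte - e.startByte)];
            raf.seek(e.startByte);
            raf.readFully(buf);
            return new String(buf, StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) {
        byte[] table = "Gene\tFC\nSTAT3\t1.5\nJAK2\t2.0\n".getBytes(StandardCharsets.UTF_8);
        for (Entry e : buildIndex(table, 0)) {
            System.out.println(e.key + "\t" + e.startByte + "\t" + e.endByte);
        }
    }
}
```

In a real lookup, the entries would typically be loaded into a HashMap keyed on the key column so that readRow() can fetch a row without scanning the whole file.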

-makeM:{makeMapTblFileName,orderedCommaColumnList}

['-makeMapFile:{makeMapTblFileName,orderedCommaColList}'

used with -concatTable command to also make a map

file at the same time. This switch is only used

with -concatTable. Default is no map is made.]

-makeP

['-makePrefaceHTML' to make a separate preface

HTML file from the input text preceding the table

data. The file has the same name, but with

"preface-" added to the front of the file name. The

preface HTML file is then linked from the generated

table HTML file. Default is no preface file.]

-makeS

['-makeStatisticsIndexMapFile' to make a 'Statistics Index Map'

table file with the same base file name as the index map (.idx)

but with a .sidx file extension. It is invoked after the

IndexMap file is created (using the '-makeIndexMapFile' switch).

Therefore, it must be specified in a subsequent command line

(if using batch). Default is not to make a Statistics Index Map.]

-mapD:{$$keyword$$,toString}

['-mapDollarsigns:{$$keyword$$,toString}' to

map cell data of the form '$${keyword}$$' to

{toString}. The preface, epilogue as well as the

table cell data is checked to see if any keywords

should be mapped. There may be multiple instances

of this switch. Default is to not do any mappings.]
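The substitution can be sketched as a literal string replacement applied to each piece of text (preface, epilogue, and cell data). The class name, the example keywords, and the values are hypothetical; each map entry stands for one '-mapDollarsigns' switch instance.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical sketch of '-mapDollarsigns:{$$keyword$$,toString}': every
 * occurrence of each $$keyword$$ is replaced by its toString value.
 */
class DollarSignMapSketch {

    static String mapDollarSigns(String text, Map<String, String> mappings) {
        String result = text;
        for (Map.Entry<String, String> m : mappings.entrySet()) {
            result = result.replace(m.getKey(), m.getValue()); // literal, not regex
        }
        return result;
    }

    public static void main(String[] args) {
        // One entry per switch instance, e.g. -mapDollarsigns:{$$DATE$$,2008-05-01}
        Map<String, String> mappings = new LinkedHashMap<>();
        mappings.put("$$DATE$$", "2008-05-01");
        mappings.put("$$TITLE$$", "Liver data");
        System.out.println(mapDollarSigns("$$TITLE$$, updated $$DATE$$", mappings));
    }
}
```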

-mapH:{mapHdrNamesFile,fromHdrName,toHdrName}

['-mapHdrNames:{mapHdrNamesFile,fromHdrName,toHdrName}'

to map header names, e.g., map long to short header

names, or map obscure to well-defined header names.

The map file (specified with a relative path) is

tab-delimited and must contain both the {fromHdrName}

and {toHdrName} columns. Default is no mapping.]

-mapO

['-mapOptionsList' to map ;; delimited strings

to inactive <OPTION> pull-down option lists.

Default is no mapping to option lists.]

-mapQ:{??keyword??,toString}

['-mapQuestionmarks:{??keyword??,toString}' to

map cell data of the form '??{keyword}??' to

{toString}. If the toString is BOLD_RED,

BOLD_GREEN, or BOLD_BLUE, then the entire

??{keyword}?? string is just rendered in bold red (green

or blue). The preface, epilogue as well as the

table cell data is checked to see if any keywords

should be mapped. There may be multiple instances

of this switch. Default is to not do any mappings.]

-noB

['-noBorder' to set no border for tables. The

default is there is a 'BORDER=1' in the TABLE.]

-noHeader

['-noHeader' to specify that tables have no header. The

default is that there is a header in the input file.]

-noHTML

['-noHTML' to not generate HTML where it would

normally do so. This switch disables generation of

HTML during input file processing if that

operation would otherwise generate HTML. This is useful

when editing large input files to generate

index maps or saved files. The default is to allow

the generation of HTML.]

-outputD:{output directory}

['-outputDirectory:{output directory}' to set the

output directory. The default directory is 'html/'.]

-reorderC:{colName,newColNbr}

['-reorderColumn:{colName,newColNbr}' to reorder

this column to the new column number. You may

specify multiple new columns (they must be

different). Those columns not specified are moved

toward the right. This is done after the list of

dropped columns has been processed. There can be

multiple instances of this switch. Default is not

to reorder columns.]

-reorderR

['-reorderRemainingColumnsAlphabeticly' is used with

a set of -reorderColumn operations to sort the remaining

columns that were not specified, but are used, alphabetically.

Default is not to sort the remaining columns.]

-rmvT

['-rmvTrailingBlankRowsAndColumns' to remove trailing blank

rows and columns in the table. Default is not to remove trailing

blank rows or trailing blank columns.]

-saveE:{outTblFile,opt. "HTML"}

['-saveEditedTable2File:{outTblFile,opt. "HTML"}'

to make a Table file from the modified input

table stream. It is created after the Table is

edited by -dropColumns, -keepColumns,

-reorderColumns, -sortRowsByColumn. If the outTblFile

is not specified (i.e., ":,") then the input file name

with the postfix name from

'-addOutfilePostfix:{postfix name}' is

used. If the "HTML" option is set, it also outputs the

HTML when doing this operation. Note that the switch

should not be used with '-fastEditFile:{opt. output file}'

which can be used for converting very large files

without generating the HTML file. Default is not to save

the Table.]

-searchGui

['-searchGui' to invoke the graphical user interface for the

database search engine to generate a flip table. See the section

"Search Database GUI generating specialized reports."

Also see Example 17

for examples of the default parameter file used as the

basis of the flip table generated. Default is no search GUI.]

-shrinkB:{opt. size for big,opt. font size decrement}

['-shrinkBigCells:{opt. size for big,opt. font size

decrement}' to shrink cells in the Table with more than the big

threshold number of characters/cell by decreasing

the font size to -5 (or the opt. font size

decrement) for those cells. The big size threshold

defaults to 25 characters. Setting the threshold to

1 forces all cells to shrink. Default is not to

shrink cells.]

-showDataHeatmapFlipTable

['-showDataHeatmapFlipTable' used to generate colored heat-map

data cells in an HTML conversion of a flip table generated with the

'-flipTableByIndexMap' option. It uses the global statistics

on the (numeric) data in the Statistics Index Map .sidx file

if it exists to normalize the data and generate a cell color

background range in 7 quantiles of colors: dark green,

medium green, light green, white, light red, medium red, dark red.

Default is not to generate the colored heatmap.]
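The binning step can be sketched as follows. This is only one plausible reading of "7 quantiles": it assumes the global statistics supply a minimum and maximum and divides that range into 7 equal-width bins (not true quantiles). The hex colors and class name are placeholders, not the values used by HTMLtools.

```java
/**
 * Hypothetical sketch of 7-color heat-map binning for '-showDataHeatmapFlipTable'.
 * Assumptions: global min and max come from the statistics, [min, max] is split
 * into 7 equal-width bins, and the hex colors stand in for the real shades.
 */
class HeatmapColorSketch {

    static final String[] COLORS = {
        "#006400", // dark green
        "#32CD32", // medium green
        "#90EE90", // light green
        "#FFFFFF", // white
        "#FFB6C1", // light red
        "#FF4500", // medium red
        "#8B0000"  // dark red
    };

    static String colorFor(double value, double globalMin, double globalMax) {
        if (Double.isNaN(value) || !(globalMax > globalMin)) {
            return COLORS[3]; // missing value or degenerate range: show white
        }
        double normalized = (value - globalMin) / (globalMax - globalMin); // 0..1
        int bin = (int) Math.floor(normalized * COLORS.length);
        bin = Math.max(0, Math.min(COLORS.length - 1, bin)); // clamp; max falls in last bin
        return COLORS[bin];
    }

    public static void main(String[] args) {
        for (double v : new double[] {0, 35, 70}) {
            System.out.println(v + " -> " + colorFor(v, 0, 70));
        }
    }
}
```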

-sortFlip:{col data name}

['-sortFlipTableByColumnName:{col data name}' specifies the name

of the field in the flip table to use in sorting by column data in

descending order in the generated table. It is used with the

'-flipTableByIndexMap' option. Note this name can be any of the

flipped header column values (multi-header data names). When doing

the sort it matches the specified name with any of the header

rows to find the column to use for the sort. Default is not to

sort the generated flip table.]

-sortR:{colName,'A'scending or 'D'escending}

['-sortRowsByColumn:{colName,'A'scending or 'D'escending}' to sort

the table rows by the data in the specified column. You can specify

'A'scending or 'D'escending. This is done after any columns have been

dropped or reordered. If the column is not found, don't sort - just

continue. You can have multiple instances of the switch. If the

first column name is not found, it looks for the second, etc.

and only ignores the sort if no column names are found. Default

is not to sort the table.]
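The fallback behavior for multiple '-sortRowsByColumn' instances can be sketched in Java as follows. The names are hypothetical, and the comparison rule (numbers compare numerically and sort before text) is an assumption chosen so the comparator is a consistent total order; HTMLtools may compare cells differently.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Hypothetical sketch of '-sortRowsByColumn' with several instances: the first
 * listed column name found in the header is used; if none is found the rows
 * are left unsorted. Assumption: numeric cells compare numerically and sort
 * before non-numeric cells, which compare as plain strings.
 */
class SortRowsSketch {

    static Double parseOrNull(String s) {
        try {
            return Double.valueOf(s.trim());
        } catch (NumberFormatException e) {
            return null;
        }
    }

    static int compareCells(String a, String b) {
        Double da = parseOrNull(a), db = parseOrNull(b);
        if (da != null && db != null) return Double.compare(da, db);
        if (da != null) return -1;
        if (db != null) return 1;
        return a.compareTo(b);
    }

    /** Returns a sorted copy of rows; 'A' ascending, 'D' descending. */
    static List<String[]> sortRows(List<String> header, List<String[]> rows,
                                   List<String> colNames, char order) {
        int col = -1;
        for (String name : colNames) { // first name present in the header wins
            col = header.indexOf(name);
            if (col >= 0) break;
        }
        List<String[]> sorted = new ArrayList<>(rows);
        if (col < 0) {
            return sorted; // no column found: don't sort, just continue
        }
        final int c = col;
        Comparator<String[]> cmp = (r1, r2) -> compareCells(r1[c], r2[c]);
        sorted.sort(order == 'D' ? cmp.reversed() : cmp);
        return sorted;
    }

    public static void main(String[] args) {
        List<String> header = List.of("Gene", "FC");
        List<String[]> rows = List.of(
            new String[] {"A", "2.5"}, new String[] {"B", "10"}, new String[] {"C", "-1"});
        for (String[] r : sortRows(header, rows, List.of("Missing", "FC"), 'D')) {
            System.out.println(r[0] + "\t" + r[1]); // B, A, C
        }
    }
}
```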

-startT:{keyword}

['-startTableAtKeywordLine:{keyword}' identifies the

last line of a Table header by a keyword that

is part of any of the fields in that line. This is

useful when reading a file with complex preface info

with possibly multiple blank lines. It can be used

with the '-hdrLines' switch to specify multiple

header lines. Default is no keyword search.]

-tableD:{tablesDirectory}

['-tableDir:{tablesDirectory}' to set the various mapping

tables directory. These tables are used during various

conversion procedures. They include both the .txt and

the .map file (same file, but with different extensions).

Examples include: EGMAP.map(.txt), ExperimentGroups.map(.txt) and

mAdbArraySummary.map(.txt). The default directory is

'data.Table/'.]

-useOnly

['-useOnlyLastHeaderLine' to reduce the number of header

lines to 1 even if there are more than 1 header line.

Default is to use all of the header lines.]

3.1 GenBatchScripts COMMANDS Extension

These commands are used to create batch scripts for subsequent use by

HTMLtools. This set of commands is called the GenBatchScripts commands.

The GenBatchScripts process is described in

Section 1.1. The -genBatchScripts command is only used to generate these

batch scripts in a set of structured trees suitable for copying directly to a

Web server. It uses a test-ToDo-list.txt Table to specify a list of tests (column

"Test-name"), a column "Relative directory" where the data is to be saved, and some

documentation columns ("Page label", "Page description", and "Tissue name")

that are used to help generate the Summary HTML Web pages and the

params .map files used in the subsequent conversion of the .txt Table

data files to HTML documentation. See Example 15

for an example of a params.map file using the GenBatchScripts commands.


-genBatch:{batchDir,paramScriptsDir,inputTreeDir,summaryDir,analysisDir}

['-genBatchScripts:{batchDir,paramScriptsDir,inputTreeDir,

outputTreeDir,analysisTreeDir,JTVDir}' to generate a set

of scripts to batch convert a set of tab-delimited Table

test data files specified by the -genTestFile:{testToDoFile}

Table in the {batchDir} directory. It generates a set of

parameter .map files in the {paramScriptsDir} directory. It

also generates a set of summary HTML Web pages in {summaryDir}

that describe the data, one page for each type of tissue,

and (pre) generates links to data that will be generated in

the {analysisTreeDir} when the batch script is subsequently

run. These new params .map files can then be run by a converter

batch file called buildWebPages.doit started with a

Windows buildWebPages.bat BAT file to start the batch

job (both files are in the batchDir directory along with a

copy of HTMLtools.jar). The buildWebPages.bat file

could easily be edited to run on MacOS-X or Linux. The paths

created in the {inputTreeDir} and {analysisTreeDir} base paths

use the "Relative Directory" data in the {testToDoFile} within

those directories. This generated batch .doit script will

process a data set to generate a set of HTML pages and

converted database .txt files defined by the {testToDoFile}

Table database. Default is no batch script generation.

Additional switches required with -genBatchScripts are:

-genTestFile, -genMapEGdetails, and -genMapEGintroduction]

-genC:{support file}

['-genCopySupportFile:{support file}' to specify a list

of support files to copy to the output batchDir (e.g.,

'-outputDir:batchScripts'). The support files are

specified with a list created using multiple instances of

-genCopySupportFile:{support file}. Default is no support

files to copy.]

-genMapEGd:{EGdetailsMapFile}

['-genMapEGdetails:{EGdetailsMapFile}' specifies the

'details' Table used when the -genBatchScripts switch is

invoked. This is required when the -genBatchScripts switch

is used.]

-genMapIntro:{introductionMapFile}

['-genMapIntroduction:{introductionMapFile}' specifies the

'Introduction' Table used when the -genBatchScripts switch

is invoked. This is required when the -genBatchScripts

switch is used.]

-genP:{name,paramTemplateFileName}

['-genParamTemplate:{name,paramTemplateFileName}' to

specify a list of parameter map Templates that are used

for mapping the test-ToDo-list data into parameter files

(e.g., param-MRR, param-MRR-keep, param-JTV) dynamically. The

following keywords may appear in any of these templates and

are mapped: $$TISSUE$$, $$TEST_NAME$$,

$$MRR_FILE$$, $$DESCRIPTION$$, $$PROLOG$$, $$EPILOG$$,

$$DATE$$. Multiple unique instances are allowed. The

default is no parameter templates.]

-genS:{orderNbr,templateFileName}

['-genSummaryTemplate:{orderNbr,templateFileName}' to define

a list of Summary Templates (e.g., summaryProlog, summaryExperimental,

summaryAnalysis, summaryFurtherAnalysis, summaryEpilogue) that are

used for mapping the test-ToDo-list data dynamically. Each template

is set by an instance of -genSummaryTemplate:{orderNbr,templateFileName};

these can be used to generalize what is currently hardwired. The

following keywords may appear in any of these templates and are mapped:

$$TISSUE$$, $$LIST_EXPR_GROUPS$$, $$DESCRIPTION$$,

$$ANALYSIS$$, $$FURTHERANALYSIS$$, $$DATE$$. The

$$INTRODUCTION$$ is extracted from the {"CellTypeTissue.map"}.

Default is no templates being defined. Multiple instances

are allowed; they are concatenated in the order of the orderNbr

associated with each template.]

-genTest:{testToDoFile}

['-genTestFile:{testToDoFile}' specifies the tests to do

when the -genBatchScripts switch is invoked. This is

required when the -genBatchScripts switch is used.]

-genTree:{sourceTreeDir,destDir}

['-genTreeCopyData:{sourceTreeDir,destDir}' to copy an

input data tree {sourceTreeDir} to the batch scripts subdirectory {destDir}.

There can be multiple instances of this option.

Default is to not copy tree data.]

3.2 Tests-Intersection COMMANDS Extension

This Tests-Intersection subset of commands is only used to create

Tests-Intersection tables from mAdb Retrieval Reports (MRR) containing fold-change

data from the Tests-ToDo database used with GenBatchScripts. The primary command to

invoke this is the -makeTestsIntersectionTbl switch. These Tests-Intersection commands

can be used with the regular HTML or table editing commands such as the

'-noHTML' and/or '-saveTable' switches. If HTML is generated, then

'-addProlog', '-addEpilogue', '-mapQuestion', '-mapDollar', '-sortByColumn',

'-limitMaxTableRows', etc. can also be used. See Example 13 for

an example of generating a Tests-Intersection tab-delimited table and HTML

Web page.

-addFCranges

['-addFCrangesForTestsIntersectionTable' may be used when

generating a Tests-Intersection Table using the

'-makeTestsIntersectionTbl:{testsToDoFile}' switch. It

does a simple fold-change (FC) row analysis after the

Tests-Intersection Table is created by adding ("Min FC",

"Max FC", "FC Range") data for each row. Because this

extends the table, you can sort by any of these fields.]
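A per-row sketch of the three added fields. It assumes "FC Range" means Max FC minus Min FC and that blank or non-numeric cells (tests with no value for that gene) are skipped; the class and method names are hypothetical.

```java
/**
 * Hypothetical sketch of the per-row columns added by
 * '-addFCrangesForTestsIntersectionTable'. Assumptions: "FC Range" is
 * Max FC minus Min FC, and blank or non-numeric cells are skipped.
 */
class FoldChangeRangeSketch {

    /** Returns {Min FC, Max FC, FC Range} for one row, or null if it has no numeric cells. */
    static double[] fcRange(String[] fcCells) {
        double min = Double.POSITIVE_INFINITY;
        double max = Double.NEGATIVE_INFINITY;
        boolean any = false;
        for (String cell : fcCells) {
            double fc;
            try {
                fc = Double.parseDouble(cell.trim());
            } catch (NumberFormatException e) {
                continue; // blank or non-numeric cell: no value for this test
            }
            min = Math.min(min, fc);
            max = Math.max(max, fc);
            any = true;
        }
        return any ? new double[] {min, max, max - min} : null;
    }

    public static void main(String[] args) {
        double[] r = fcRange(new String[] {"2.0", "", "-1.5", "3.0"});
        System.out.println("Min FC=" + r[0] + " Max FC=" + r[1] + " FC Range=" + r[2]);
    }
}
```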

-addRange

['-addRangeOfMeansToTItable' to add the ("Range Mean A",

"Range Mean B" and "FC counts %") computations to an expanded

Tests-Intersection Table. Default is to not add these

fields.]

-filterData:{dataTableField,d1,d2,...,dn}

['-filterDataTestIntersection:{dataTableField,d1,d2,...,dn}' that

is used with the '-makeTestsIntersectionTbl:{testsToDoFile}' to

filter the MRR rows using the specified MRR {dataTableField}

and use it if it matches any of {d1,d2,...,dn} substrings.

The default is not to filter the Tests-Intersection Table.]

-filterTest:{testTableField,d1,d2,...,dn}

['-filterTestTestIntersection:{testTableField,d1,d2,...,dn}' that

is used with the '-makeTestsIntersectionTbl:{testsToDoFile}' to

filter the Tests-ToDo table rows using the specified {testTableField}

and use it if it matches any of {d1,d2,...,dn} substrings.

The default is not to filter the Tests-Intersection Table.]

-makeT:{testsToDoFile,testsInputTreeDir}

['-makeTestsIntersectionTbl:{testsToDoFile}' that

generates a Tests-Intersection Table that contains

data from the individual tests in the tests input data tree.

The tests are specified by the {testsToDoFile} in the -tableDir

directory, which also specifies the relative data file tree.

The tree is found in the -inputDir directory. The data files in

the tree are used as input data. The computed table is

organized by rows of +FC genes/Feature-IDs and -FC

genes/Feature-IDs. The data from the {testsToDoFile} is also used

to get additional information for each test.

This switch is used with the '-noHTML' and/or '-saveTable'

switches. If HTML is generated, then the '-addProlog' and

'-addEpilogue', '-mapQuestion', and '-mapDollar' can be used.

You can filter the MRR rows using the

'-filterDataTestIntersection:{dataTableField,d1,d2,...,dn}' and

the {testsToDoFile} test data using the

'-filterTestTestIntersection:{testTableField,d1,d2,...,dn}'.

The default is not to make the Tests-Intersection Table. You

can do a simple FC row analysis by adding ("Min FC" "Max FC"

"FC Range") for each row using the

'-addFCrangesForTestsIntersectionTable' switch.]

3.3 Java TreeView COMMANDS Extension

These commands are only used to convert Java TreeView (JTV) mAdb heatmap data

files for use on the Jak Stat Prospector Web site. In addition to mapping the

sample names from mAdb names to GSP ID names, it reorders the data so that the

"gene - gene description" appears first rather than the "WID: #" in the "NAME"

field. It also changes the contents of the "YORF" field data to the

"gene - gene description" data so that when mousing over a heatmap cell in

the zoom window, the upper left-hand corner displays the "gene - gene description"

for the row and the GSP ID sample for the column.

Reorder:

"WID:... || xxxxxx_at || MAP:... || gene -- geneDescr. || RID:..."

to

"gene -- geneDescr. || xxxxxx_at || WID:... || MAP:... || RID:..."

You cannot mix tab-delimited file to HTML conversions with JTV conversions in

the same params .map file.
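The NAME field reordering shown above can be sketched as a split on the " || " separator followed by a fixed permutation. This is a hypothetical illustration (class and method names are made up) that assumes exactly five fields in the order shown; anything else is returned unchanged. The sample field values are invented.

```java
/**
 * Hypothetical sketch of the JTV "NAME" field reordering:
 * "WID || probe || MAP || gene -- descr. || RID" becomes
 * "gene -- descr. || probe || WID || MAP || RID".
 * Assumption: fields are separated by " || " and appear in that order.
 */
class JtvNameReorderSketch {

    static String reorder(String name) {
        String[] f = name.split(" \\|\\| ", -1);
        if (f.length != 5 || !f[0].startsWith("WID:")) {
            return name; // not in the expected layout
        }
        // new order: gene description, probe ID, WID, MAP, RID
        return String.join(" || ", f[3], f[1], f[0], f[2], f[4]);
    }

    public static void main(String[] args) {
        System.out.println(reorder(
            "WID:123 || 1234_at || MAP:5q || STAT3 -- signal transducer || RID:9"));
    }
}
```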

-jvtB:{button name for JTV activation button}

['-jvtButtonName:{button name for JTV activation button}'

that may be used with '-jtvHTMLgenerate' to label the

button to activate Java TreeView. The default is

"Press the button to activate JTV".]

-jtvC:{JTV jars directory}

['-jtvCopyJTVjars:{JTV jars directory}' to copy the

JTV jar files and plugins to the jtvOutputDir.

The default is no copying of the .jar files.]

-jvtD:{description text for prologue}

['-jvtDescription:{description text for prologue}'

that may be used with '-jtvHTMLgenerate' to insert

additional text into the prolog where it replaces

$$DATA_DESCRIPTION$$. The default is no description.]

-jtvFiles:{f1,f2,...,fn}

['-jtvFiles:{f1,f2,...,fn}' to specify a list of files

here rather than using all of the files in the

jtvInputDir. You can have multiple instances of this

switch.]

-jtvH:

['-jtvHTMLgenerate' to generate an HTML file to invoke

the JTV applet for each JTV specification in the

jtvInputDir. It puts the HTML file in the jtvOutputDir.

Some of the non-JTV HTML modification switches are

operable including: '-addEpilogue', '-addOutfilePostfix',

'-addProlog', '-mapQuestionmarks'. The default is to not

generate JTV HTML.]

-jtvI:{input JTV directory}

['-jtvInputDir:{input JTV directory}' to set the

input directory of JTV sub directories. This contains

the zipped or unzipped JTV files downloaded from mAdb.

Each zip file contains 3 files with (.atr,.cdt,.gtr)

extensions. Default directory is 'JTVinput/'.]

-jtvO:{output JTV directory}

['-jtvOutputDir:{output JTV directory}' to set the

output directory of JTV sub directories. The converted

JTV directory and a corresponding HTML file are saved

there. Default directory is 'JTVoutput/'.]

-jtvN:{mAdbArraySummary,mapHdrNamesFile,fromHdrName,toHdrName}

['-jtvMapping:{mAdbArraySummaryFile,mapHdrNamesFile,

fromHdrName,toHdrName}' to convert a list of sub

directories of JTV file sets by reading the three files

from each of the subdirectories in the jtvInputDir

directory. The {mAdbArraySummaryFile} and {mapHdrNamesFile}

are specified with a relative path. It maps the .cdt file

in each sub directory to use the {toHdrName} column of

the equivalent mapNamesFile map Table instead of

the "EID:'mAdb ID'" as generated by mAdb. The mapping

between "mAdb ID" and short array names is done using

the {fromHdrName} column of the jtv_mAdbArraySummaryFile

Table map. It then writes out the JTV subset to a created

sub directory in jtvOutputDir that has the same base

name as the input JTV subdirectory being processed.

See the optional switches: '-jtvInputDir:{jtvInputDir}'

and '-jtvOutputDir:{jtvOutputSubDir}' to set the

directories to other than the defaults ("JTVinput" and

"JTVoutput"). The values for {fromHdrName} and {toHdrName}

should be column names in the mapNamesFile.]

-jtvR [TODO]

['-jtvReZipConvertedFiles' to reZip the converted files

in the output JTV directory in a file with the same name.

Default is not to zip the converted files.]

-jtvTableDir:{tablesDirectory}

['-jtvTableDir:{tablesDirectory}' to set the various mapping

tables directory. These tables are used during various

conversion procedures. They include both the .txt and

the .map file (same file, but with different extensions).

Examples include: EGMAP.map(.txt), ExperimentGroups.map(.txt) and

mAdbArraySummary.map(.txt). Note: this switch is used when

processing JTV files, but may also be set with the

'-tableDir:{tablesDirectory}' switch. The default directory

is 'data.Table/'.]

4. EXAMPLES

We demonstrate running the program with a set of examples (and a few sub

examples). The first eight (1 through 8) are for converting tab-delimited .txt

files to .html files. Example 9 illustrates remapping sample labels for a Java

TreeView conversion. Example 10 shows how these examples can be run by specifying

a list of parameter .map files using a batch command. Example 11 illustrates

editing a very large .txt file into another .txt file using the fast edit command.

Example 12 illustrates URL mapping the header data in a transposed table.

Example 13 generates a Tests-Intersection .txt and .html table from the tests data

also used in Example 15. Example 14 illustrates generating a flipped table with

hyperlinked multi-line headers with data filtered by row and column name filters.

Example 15 illustrates generating a set of batch jobs to

convert data described in a table file, generating summary Web pages and a set of params

.map files in a tree structure. Example 16 is used for preparing a database

and an Index Map file for use in the GUI-based database search shown

in Example 17.

Example 1.

The program with no arguments uses the defaults described above. It will look

for tab-delimited .txt files in the default input directory (data/) and save

the generated HTML files in the output directory (html/). It also looks for

the default template files prolog.html and epilogue.html in the current directory.

================================================================

HTMLtools
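The default file selection can be pictured with the sketch below. It is hypothetical (the class and method names are not part of HTMLtools) and only shows the naming convention assumed here: each data/*.txt file becomes html/*.html with the same base name. Prolog and epilogue handling is omitted.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of how the Example 1 defaults select input and output files. */
final class DefaultBatch {
    /** "EG001.txt" -> "EG001.html"; returns null for anything that is not a .txt file. */
    static String htmlNameFor(String fileName) {
        if (!fileName.endsWith(".txt")) return null;
        return fileName.substring(0, fileName.length() - ".txt".length()) + ".html";
    }

    /** Lists "input -> output" pairs for every .txt file in inputDir, sorted by name. */
    static List<String> plan(Path inputDir, Path outputDir) throws IOException {
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> ds = Files.newDirectoryStream(inputDir, "*.txt")) {
            ds.forEach(inputs::add);                    // params .map files are never picked up
        }
        inputs.sort(null);                              // natural Path order
        List<String> jobs = new ArrayList<>();
        for (Path in : inputs) {
            Path out = outputDir.resolve(htmlNameFor(in.getFileName().toString()));
            jobs.add(in + " -> " + out);
        }
        return jobs;
    }

    public static void main(String[] args) throws IOException {
        Path data = Paths.get("data");
        if (!Files.isDirectory(data)) {
            System.out.println("No data/ directory in the current directory");
            return;
        }
        plan(data, Paths.get("html")).forEach(System.out::println);
    }
}
```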

Example 2.

The defaults for Example 1 are shown explicitly here. [The '\' indicates

a line continuation in Unix and is used here for ease of reading. When the

command is issued from the command line, all of the arguments should be on

the same line unless you use the line continuation character of your operating system.]

================================================================

HTMLtools -addProlog:prolog.html -addEpilogue:epilogue.html \

-inputDir:data -outputDir:html -tableDir:data.Table

Example 3.

This gets the arguments from the default data/params.map file if

it exists. These params .map files are generally kept in the same

directory as the .txt input files to be converted. Any file extension

other than .txt could be used (since all .txt files found in the data

directory are converted), so by convention we use the .map file extension.

================================================================

HTMLtools data/params.map
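Judging only from the params .map files shown in these examples, a reader for them might look like the following sketch: lines starting with '#' are comments, every other non-blank line is one switch, the switch name ends at the first ':', and double quotes around an argument with spaces are dropped. These parsing rules and all names below are assumptions; the HTMLtools parser itself may differ.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of reading a params .map file into switch name/value pairs. */
final class ParamsMapReader {
    static final class Switch {
        final String name;   // e.g. "-addTableName"
        final String value;  // e.g. "GSP Experiment Group Samples" ("" if the switch has no argument)
        Switch(String name, String value) { this.name = name; this.value = value; }
        @Override public String toString() { return value.isEmpty() ? name : name + " = " + value; }
    }

    static List<Switch> parse(List<String> lines) {
        List<Switch> switches = new ArrayList<>();
        for (String raw : lines) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) continue;   // blank line or comment
            int colon = line.indexOf(':');                           // split at the FIRST ':' only
            String name = colon < 0 ? line : line.substring(0, colon);
            String value = colon < 0 ? "" : line.substring(colon + 1);
            if (value.length() >= 2 && value.startsWith("\"") && value.endsWith("\"")) {
                value = value.substring(1, value.length() - 1);      // drop quotes around args with spaces
            }
            switches.add(new Switch(name, value));
        }
        return switches;
    }

    public static void main(String[] args) throws IOException {
        Path file = Paths.get(args.length > 0 ? args[0] : "data/params.map");
        if (!Files.exists(file)) {
            System.out.println("Not found: " + file);
            return;
        }
        parse(Files.readAllLines(file)).forEach(System.out::println);
    }
}
```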

Example 4.

This uses a simple spreadsheet with row background colors

alternated between the prolog background color and white; big

cells have their font shrunk, and trailing blank rows are removed.

The switches are in file 'params-GSPI-EG.map'. The

'-extractRow:"Experiment Group ID (1),1,data.Table/ExperimentGroups.map,DL"'

switch tells it to extract the row of the ExperimentGroups.map file whose

"Experiment Group ID (1)" column (column names are in row 1) matches the data

in the column of the same name in the table being converted, and to generate a

<DL> list from that row in the epilogue. This lets you use data from

a meta-database table to document each of the individual tables being

converted.

E.g., the GSP-Inventory.xls EG samples

data saved from Excel worksheets. Note: you must double quote arguments that use

spaces.

===============================================================

HTMLtools data.GSPI-EG/params-GSPI-EG.map

where: data.GSPI-EG/params-GSPI-EG.map

contains:

#File:params-GSPI-EG.map

#"Revised: 3-30-2009"

#

-addPrologue:data.GSPI-ExpGrp/prolog.html

-addEpilogue:data.GSPI-ExpGrp/epilogue.html

-addRowNumbers

-addTableName:"GSP Experiment Group Samples"

-inputDir:data.GSPI-EG

-outputDir:html/GSP/GSP-Inventory/HTML

-tablesDir:data.Table

#

-extractRow:"Experiment Group ID (1),1,data.Table/ExperimentGroups.map,DL"

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-rmvTrailingBlankRowsAndColumns

#

#"----------- End --------- "
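The '-extractRow' lookup used in this example can be sketched as follows, assuming the map table has already been read into rows with the column names in row 1. The class and method names, the exact <DL>/<DT>/<DD> layout, and the sample values (Smith, Liver time course) are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of an '-extractRow' lookup rendered as an HTML <DL> list. */
final class ExtractRowSketch {
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    /**
     * mapTable.get(0) is the header row (row 1); the remaining entries are data rows.
     * Returns the first row whose keyColumn equals keyValue as a <DL>, or "" if none matches.
     */
    static String rowAsDl(List<String[]> mapTable, String keyColumn, String keyValue) {
        String[] header = mapTable.get(0);
        int keyIdx = Arrays.asList(header).indexOf(keyColumn);
        if (keyIdx < 0) return "";
        for (String[] row : mapTable.subList(1, mapTable.size())) {
            if (keyIdx < row.length && row[keyIdx].equals(keyValue)) {
                StringBuilder sb = new StringBuilder("<DL>\n");
                for (int c = 0; c < header.length && c < row.length; c++) {
                    sb.append("<DT>").append(escape(header[c]))
                      .append("<DD>").append(escape(row[c])).append('\n');
                }
                return sb.append("</DL>\n").toString();
            }
        }
        return "";
    }

    public static void main(String[] args) {
        List<String[]> experimentGroups = List.of(
            new String[] {"Experiment Group ID (1)", "WHO", "WHAT"},
            new String[] {"EG001", "Smith", "Liver time course"});
        // In a real conversion the key value would come from the table being converted.
        System.out.print(rowAsDl(experimentGroups, "Experiment Group ID (1)", "EG001"));
    }
}
```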

Example 4.1

This example generates a concatenated .txt file from a

set of simple spreadsheets. Row background colors are alternated,

big cells have their font shrunk, and trailing blank rows are removed.

E.g., a single table built from the set of files EG001.txt to EG0nn.txt, each with a

single header row, from the GSP-Inventory.xls

data saved from Excel worksheets. They are concatenated into the file "EGMAP.txt".

The switches are in file 'params-GSPI-EG-concat.map'.

Note: you must double quote arguments that use spaces.

==================================================================

HTMLtools data.GSPI-EG/params-GSPI-EG-concat.map

where: data.GSPI-EG/params-GSPI-EG-concat.map

contains:

#File:params-GSPI-EG-concatTXT.map

#"Revised: 3-29-2009"

#

-addPrologue:data.GSPI-EG/prolog.html

-addEpilogue:data.GSPI-EG/epilogue.html

-addRowNumbers

-addTableName:"GSP Inventory Concatenated List of all EG Samples"

-inputDir:data.GSPI-EG

-outputDir:data.Table

-tablesDir:data.Table

#

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-rmvTrailingBlankRowsAndColumns

#

#"Save the concatenated data in the following file."

#-concatTables:EGALLDataSet.txt

-concatTables:EGMAP.txt,noHTML

#

#"----------- End --------- "
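Concatenating the single-header-row EG tables can be sketched as below: the header line is kept once and the data rows of every file are appended. This is an illustrative assumption, not the HTMLtools implementation; in particular, the sketch rejects tables whose headers differ, and the EG*.txt file pattern and class name are assumed. Files are read and written as ISO-8859-1 so that single-byte Excel exports round-trip unchanged.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of concatenating single-header-row tab-delimited tables. */
final class TableConcat {
    /** Keeps the first table's header row once and appends every table's data rows. */
    static List<String> concat(List<List<String>> tables) {
        List<String> out = new ArrayList<>();
        String header = null;
        for (List<String> table : tables) {
            if (table.isEmpty()) continue;
            if (header == null) {
                header = table.get(0);
                out.add(header);
            } else if (!header.equals(table.get(0))) {
                // Assumption: all inputs share one header; a real tool might merge columns instead.
                throw new IllegalArgumentException("Header mismatch: " + table.get(0));
            }
            out.addAll(table.subList(1, table.size()));
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        Path inDir = Paths.get("data.GSPI-EG");
        Path outFile = Paths.get("data.Table", "EGMAP.txt");
        if (!Files.isDirectory(inDir) || !Files.isDirectory(outFile.getParent())) {
            System.out.println("Expected data.GSPI-EG/ and data.Table/ in the current directory");
            return;
        }
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> ds = Files.newDirectoryStream(inDir, "EG*.txt")) {
            ds.forEach(inputs::add);
        }
        inputs.sort(null);                                   // EG001.txt, EG002.txt, ...
        List<List<String>> tables = new ArrayList<>();
        for (Path p : inputs) tables.add(Files.readAllLines(p, StandardCharsets.ISO_8859_1));
        Files.write(outFile, concat(tables), StandardCharsets.ISO_8859_1);
    }
}
```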

Example 4.2

This example extends Example 4.1 to generate an HTML file from a concatenated

set of .txt files. They are concatenated into the "EGMAP.html" file.

Note the use of the 'noTXT' argument in the '-concatTables' switch. Row background colors

are alternated, big cells have their font shrunk, and trailing blank rows are

removed. The switches are in file

'data.GSPI-EG/params-GSPI-EG-concatHTML.map'. Note: you must double quote

arguments that use spaces.

==================================================================

HTMLtools data.GSPI-EG/params-GSPI-EG-concatHTML.map

where: data.GSPI-EG/params-GSPI-EG-concatHTML.map

contains:

#File:params-GSPI-EG-concatHTML.map

#"Revised: 3-29-2009"

#

-addPrologue:data.GSPI-ExpGrp/prolog.html

-addEpilogue:data.GSPI-ExpGrp/epilogue.html

-addRowNumbers

-addTableName:"GSP Inventory Concatenated List of all EG Samples"

-inputDir:data.GSPI-EG

-outputDir:html/GSP/GSP-Inventory/HTML

-tablesDir:data.Table

#

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-rmvTrailingBlankRowsAndColumns

#

#"Save the concatenated data in the following file."

#-concatTables:EGALLDataSet.txt

-concatTables:EGMAP.txt,noTXT

#

#"----------- End --------- "
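All of the examples so far use '-alternateRowBackgroundColor:white'. The sketch below shows one way such alternating <TR> rows could be emitted. The class name, the use of the bgcolor attribute, and the sample color #FFFFCC standing in for the prolog background color are assumptions, not taken from HTMLtools.

```java
import java.util.List;

/** Hypothetical sketch of '-alternateRowBackgroundColor' style HTML row output. */
final class AlternateRows {
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    /** Even-indexed rows get firstColor, odd-indexed rows get secondColor. */
    static String rowsToHtml(List<String[]> rows, String firstColor, String secondColor) {
        StringBuilder sb = new StringBuilder();
        for (int r = 0; r < rows.size(); r++) {
            String bg = (r % 2 == 0) ? firstColor : secondColor;
            sb.append("<TR bgcolor=\"").append(bg).append("\">");
            for (String cell : rows.get(r)) {
                sb.append("<TD>").append(escape(cell)).append("</TD>");
            }
            sb.append("</TR>\n");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // "#FFFFCC" stands in for the prolog background color; it is a made-up value.
        List<String[]> rows = List.of(new String[] {"EG001", "Smith"}, new String[] {"EG002", "Jones"});
        System.out.print(rowsToHtml(rows, "#FFFFCC", "white"));
    }
}
```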

Example 4.3

This example extends Example 4.1 to generate

a .map file from the concatenated .txt files of a set of simple spreadsheets

using the '-concatTables' switch. The generated file is saved in file

data.Table/EGMAP.map. Alternatively, the .map file could be specified with

the '-mapHdrNames' switch to restrict the columns that appear in the

generated .map file. No HTML file is generated. The switches are in file

'data.Maps/params-Maps-EGMAP-map.map'. Note: you must double

quote arguments that use spaces.

==================================================================

HTMLtools data.Maps/params-Maps-EGMAP-map.map

where: data.Maps/params-Maps-EGMAP-map.map

contains:

#File:params-Maps-EGMAP-map.map

#"Revised: 3-30-2009"

#"Generate the EGMAP.map file, but no HTML file."

#

-addRowNumbers

-addTableName:"Concatenation of all GSP Experiment Groups tables."

-inputDir:data.Table

-outputDir:data.Table

-tablesDir:data.Table

-files:"EGMAP.txt"

#

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-rmvTrailingBlankRowsAndColumns

#

-concatTables:EGMAP.map,noHTML

#

#"---------- end ---------"
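Restricting a generated .map file to selected columns, as the '-mapHdrNames' alternative in Example 4.3 describes, amounts to a column projection. The hypothetical sketch below keeps only the named columns of a tab-delimited table, in the order given; the class name is made up, and how HTMLtools actually obtains the column list is not shown here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical sketch: keep only the named columns of a tab-delimited table, in the given order. */
final class ColumnSelect {
    static List<String> selectColumns(List<String> lines, List<String> keep) {
        List<String> header = Arrays.asList(lines.get(0).split("\t", -1));
        int[] idx = new int[keep.size()];
        for (int k = 0; k < keep.size(); k++) {
            idx[k] = header.indexOf(keep.get(k));
            if (idx[k] < 0) throw new IllegalArgumentException("No column named " + keep.get(k));
        }
        List<String> out = new ArrayList<>();
        for (String line : lines) {
            String[] cells = line.split("\t", -1);
            StringJoiner row = new StringJoiner("\t");
            for (int i : idx) row.add(i < cells.length ? cells[i] : "");   // short rows: empty cell
            out.add(row.toString());
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> egmap = List.of("ID\tWHO\tWHAT\tNOTES", "EG001\tSmith\tLiver\tlong text");
        selectColumns(egmap, List.of("ID", "WHAT")).forEach(System.out::println);
    }
}
```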

Example 5.

This uses a simple spreadsheet and maps some of the

cells to colored bold fonts. Row background colors are alternated, big

cells have their font shrunk, and trailing blank rows are removed.

The switches are in file 'params-GSPI-ExpGrp.map'.

E.g., the 'ExperimentGroups' sheet of GSP-Inventory.xls

describing the samples, with data saved from Excel

worksheets. Note: you must double quote arguments that use spaces.

==================================================================

HTMLtools data.GSPI-ExpGrp/params-GSPI-ExpGrp.map

where: data.GSPI-ExpGrp/params-GSPI-ExpGrp.map

contains:

#File:params-GSPI-ExpGrp.map

#"Revised: 3-30-2009"

#

-addPrologue:data.GSPI-ExpGrp/prolog.html

-addEpilogue:data.GSPI-ExpGrp/epilogue.html

-addRowNumbers

-addSubTitleFromInputFile

-addTableName:"GSP Experiment Groups Details"

-files:"ExperimentGroups.txt"

-inputDir:data.Table

-outputDir:html/GSP/GSP-Inventory/HTML

-tablesDir:data.Table

#

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-rmvTrailingBlankRowsAndColumns

#

-mapOptionsLists

-mapQuestionmarks:WHO,BOLD_RED

-mapQuestionmarks:WHAT,BOLD_RED

-mapQuestionmarks:WHEN,BOLD_RED

#

#"----------- End --------- "
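The '-mapQuestionmarks' switches can be illustrated with the hypothetical sketch below. It assumes that a cell in one of the named columns is flagged when it contains a '?', and that a style such as BOLD_RED means bold text in that color; neither rule is confirmed by this manual, and the class and method names are made up.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch of '-mapQuestionmarks:{column},{style}' cell highlighting. */
final class QuestionMarkStyler {
    private final Map<String, String> styleByColumn = new HashMap<>();

    /** Registers one switch argument such as "WHO,BOLD_RED". */
    void addSwitchArg(String arg) {
        int comma = arg.lastIndexOf(',');
        if (comma < 0) throw new IllegalArgumentException("Expected {column},{style}: " + arg);
        styleByColumn.put(arg.substring(0, comma), arg.substring(comma + 1));
    }

    /** Assumption: a cell is flagged when it contains '?'; BOLD_<COLOR> becomes bold text in that color. */
    String style(String column, String cell) {
        String style = styleByColumn.get(column);
        if (style == null || !cell.contains("?")) return cell;
        if (style.startsWith("BOLD_")) {
            String color = style.substring("BOLD_".length()).toLowerCase(Locale.ROOT);
            return "<B><FONT color=\"" + color + "\">" + cell + "</FONT></B>";
        }
        return "<B>" + cell + "</B>";
    }

    public static void main(String[] args) {
        QuestionMarkStyler s = new QuestionMarkStyler();
        for (String a : new String[] {"WHO,BOLD_RED", "WHAT,BOLD_RED", "WHEN,BOLD_RED"}) s.addSwitchArg(a);
        System.out.println(s.style("WHO", "?"));
        System.out.println(s.style("WHO", "Smith"));
    }
}
```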

Example 5.1

This example extends Example 5 by using

the '-exportBigCellsToHTMLfile:200' switch to export cells with more

than 200 characters to separate small HTML files and to generate hyperlinks

to those files in the affected cells. This makes the spreadsheet more

readable when some cells contain a large number of characters.

The switches are in file 'params-GSPI-ExpGrp-exportBigCells.map'.

E.g., the GSP-Inventory.xls 'ExperimentGroups'

samples data saved from Excel worksheets. Note: you must double quote arguments

that use spaces.

==================================================================

HTMLtools data.GSPI-ExpGrp/params-GSPI-ExpGrp-exportBigCells.map

where: data.GSPI-ExpGrp/params-GSPI-ExpGrp-exportBigCells.map

contains:

#File:params-GSPI-ExpGrp-exportBigCells.map

#"Revised: 3-30-2009"

#

-addOutfilePostfix:"-BRC"

-addPrologue:data.GSPI-ExpGrp/prolog.html

-addEpilogue:data.GSPI-ExpGrp/epilogue.html

-addRowNumbers

-addSubTitleFromInputFile

-addTableName:"GSP Experiment Groups Details"

-inputDir:data.Table

-outputDir:html/GSP/GSP-Inventory/HTML

-tablesDir:data.Table

-files:"ExperimentGroups.txt"

#

-alternateRowBackgroundColor:white

-shrinkBigCells:25,-5

-mapOptionsLists

-mapQuestionmarks:WHO,BOLD_RED

-mapQuestionmarks:WHAT,BOLD_RED

-mapQuestionmarks:WHEN,BOLD_RED

-rmvTrailingBlankRowsAndColumns

#

-exportBigCellsToHTMLfile:200

#

#"----------- End --------- "
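The big-cell export can be sketched as follows. The threshold test (more than 200 characters) follows the text; the output file naming scheme ({base}-R{row}C{col}.html), the 40-character link preview, and the class name are made up for illustration, and writing the collected pages to disk is left out.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of '-exportBigCellsToHTMLfile:{maxChars}'. */
final class BigCellExporter {
    /**
     * If cell has more than maxChars characters, records a small HTML page for it in
     * 'exported' (file name -> page text) and returns a hyperlink to that page;
     * otherwise returns the cell unchanged. The file-naming scheme is made up.
     */
    static String exportIfBig(String cell, int row, int col, int maxChars,
                              String baseName, Map<String, String> exported) {
        if (cell.length() <= maxChars) return cell;
        String fileName = baseName + "-R" + row + "C" + col + ".html";
        exported.put(fileName, "<HTML><BODY>\n" + cell + "\n</BODY></HTML>\n");
        String preview = cell.substring(0, Math.min(40, cell.length()));
        return "<A HREF=\"" + fileName + "\">" + preview + "...</A>";
    }

    public static void main(String[] args) {
        Map<String, String> exported = new LinkedHashMap<>();
        String big = "x".repeat(250);   // stands in for a long free-text cell
        System.out.println(exportIfBig("short note", 3, 1, 200, "ExperimentGroups", exported));
        System.out.println(exportIfBig(big, 3, 2, 200, "ExperimentGroups", exported));
        System.out.println("Pages to write: " + exported.keySet());
    }
}
```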

Example 6.

This example uses a spreadsheet with 2 sub-tables, the first and

second tables being separated by a blank line. This example also

allows 2-line headers, dropping some of the columns, and mapping